What is Agentic RAG? The Next Evolution of AI-Powered Workflows

Imagine a biologist working on groundbreaking medicinal research. The biologist has a wealth of knowledge about his particular field and can formulate hypotheses, but during his research he realizes that he needs specific data or previous research findings to support his current experiment. In this scenario, he might ask a lab assistant to retrieve that experiment or other information relevant to the current research. The lab assistant acts as an agent that retrieves data: the assistant might comb through journals, databases, and archives to find relevant studies and data that the biologist can use to validate his theory and improve the research outcomes.

You may wonder what this example has to do with the rest of this blog. If you understand the analogy above, you are on the right track to decoding the agentic RAG system.

What is Retrieval-Augmented Generation (RAG)?

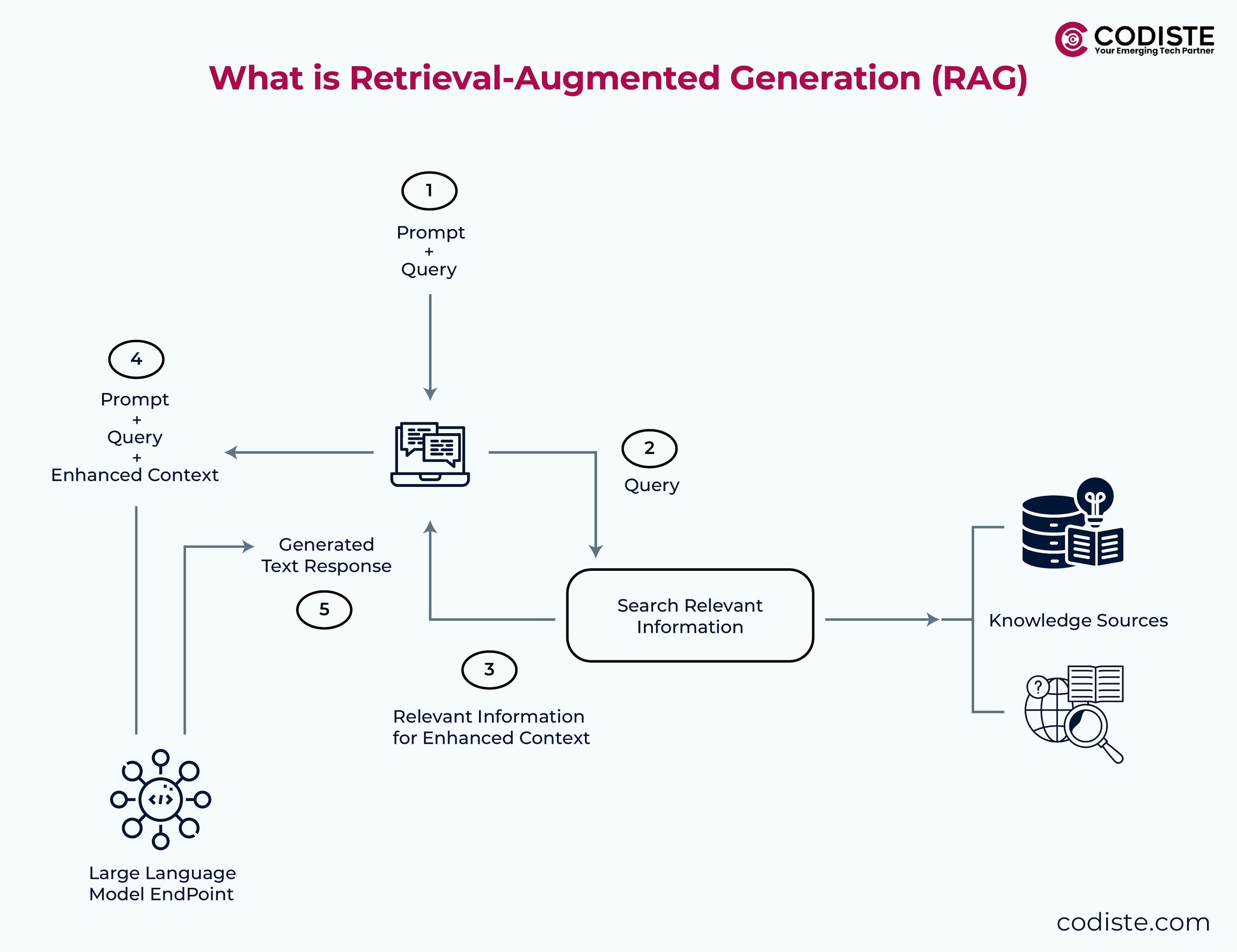

Retrieval-augmented generation (RAG) is the process of optimizing the output of a large language model so that it references an authoritative knowledge base outside of its training data sources before generating a response. Large Language Models (LLMs) are trained on vast volumes of data and use billions of parameters to generate original output for tasks like answering questions, translating languages, and completing sentences.

RAG extends the already powerful capabilities of LLMs to specific domains or an organization's internal knowledge base, all without the need to retrain the model. It is a cost-effective approach to improving LLM output, so it remains relevant, accurate, and helpful in various contexts.

Additionally, RAG enhances retrieval and response generation by employing dynamic strategies that consider context, conversation history, and real-time observations, improving efficiency and effectiveness.
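To make the basic retrieve-then-generate flow concrete, here is a minimal sketch of a naive RAG step. It is illustrative only: the in-memory `KNOWLEDGE_BASE`, the word-overlap scoring used in place of vector embeddings, and the `retrieve` and `build_prompt` helpers are all hypothetical, and the resulting prompt would be sent to whichever LLM you use. Note that the model is never retrained; only the prompt changes.

```python
import re

# Hypothetical in-memory knowledge base standing in for an authoritative source
KNOWLEDGE_BASE = {
    "policy-001": "Employees accrue 20 days of paid leave per year.",
    "policy-002": "Remote work requires manager approval.",
    "faq-010": "Expense reports are due within 30 days of purchase.",
}

def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by word overlap with the query and return the top k."""
    q = tokenize(query)
    ranked = sorted(
        KNOWLEDGE_BASE.items(),
        key=lambda item: len(q & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query: str) -> str:
    """Augment the user query with retrieved context before calling an LLM."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

print(build_prompt("How many days of paid leave do employees get?"))
```

Swapping `tokenize` and the overlap score for an embedding model and a vector store is what turns this toy into a typical production retriever; the augmentation step stays the same.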

The Evolution of RAG Systems in AI

The evolution of Retrieval-Augmented Generation (RAG) systems has been marked by significant advancements in recent years. From its inception, RAG has been designed to combine the strengths of large language models with the ability to retrieve external information, enabling more accurate and contextually relevant responses.

Traditional RAG systems initially relied on a straightforward pipeline that included retrieval and generation components. However, this naive approach faced several limitations, including retrieval challenges, generation difficulties, and augmentation issues.

To address these limitations, researchers have continuously refined RAG systems. One of the key advancements has been the improvement of the retrieval and indexing process. Enhancing retrieval precision, reducing noise, and increasing the utility of retrieved information has significantly boosted the performance of RAG systems.

Top Reasons Why We Need Retrieval-Augmented Generation in AI Today

LLMs are a key AI technology powering NLP applications and intelligent chatbots. The goal is to create chatbots that can answer users' questions in various contexts by cross-referencing authoritative knowledge sources.

Unfortunately, LLM output can be unpredictable, and the training data an LLM uses is static, with a cut-off date. Intelligent agents can help by analyzing and deconstructing user queries to improve information retrieval and user interaction.

Known challenges faced by LLMs:

- LLMs tend to present generic or out-of-date information when the user expects a specific, current response.

- LLMs sometimes present false information when they do not have the answer.

- LLMs may generate responses from non-authoritative sources.

LLMs can also produce inaccurate responses due to terminology confusion, where different training sources use the same terminology to talk about different things.

Think of LLMs as that over-enthusiastic new employee who is unaware of current events but always jumps in to answer every question with absolute confidence, even when wrong. Trust us; this is something you won't want your chatbots to emulate!

RAG becomes the ultimate savior by redirecting the LLM to retrieve relevant information from authoritative, pre-determined knowledge sources. As a result, users now have greater control over the generated text output.

Key Advantages of Using Retrieval-Augmented Generation (RAG) in AI Solutions

RAG technology brings several benefits to an organization's generative AI efforts.

Cost-effective implementation

Chatbots are typically built using foundation models. These foundation models (FMs) are API-accessible LLMs trained on a broad spectrum of generalized and unlabeled data. The costs of retraining FMs for domain-specific or organizational information are high. RAG is a more cost-effective approach, making GenAI technology widely accessible and usable.

Current information

Even if the original training data sources for an LLM satisfy your needs, it is challenging to maintain relevancy. RAG allows developers to provide the latest research, statistics, or news to the generative models by integrating multiple data sources.

Enhanced user trust

With RAG, LLMs can present accurate information with source attribution: outputs can include citations and references to sources. Users can also look up the source documents for more details and further clarification. This can increase confidence and trust in your generative AI solution.
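One simple way to support source attribution, sketched here under stated assumptions, is to number each retrieved chunk and return a mapping from citation markers to sources alongside the answer. The `Chunk` type and `answer_with_citations` function are hypothetical, and the "answer" is a placeholder built from chunk text; a real pipeline would pass the numbered chunks to an LLM and instruct it to cite them by number.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    source: str  # e.g. a document title or URL in your own corpus
    text: str

def answer_with_citations(question: str, chunks: list[Chunk]) -> dict:
    """Return an answer plus the sources it was grounded in.

    Placeholder logic: chunk texts are joined with numbered markers.
    A real system would ask an LLM to write the answer and cite [n].
    """
    numbered = [f"{c.text} [{i}]" for i, c in enumerate(chunks, start=1)]
    return {
        "question": question,
        "answer": " ".join(numbered),
        "sources": {i: c.source for i, c in enumerate(chunks, start=1)},
    }

result = answer_with_citations(
    "What is the refund window?",
    [Chunk("refund-policy.pdf", "Refunds are accepted within 14 days.")],
)
print(result["answer"])   # Refunds are accepted within 14 days. [1]
print(result["sources"])  # {1: 'refund-policy.pdf'}
```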

More developer controls

With RAG, developers can improve their chat applications more efficiently by controlling and changing the LLM's information sources to adapt to varied requirements or cross-functional usage. By integrating external tools, developers can enhance task execution and improve the quality of responses. Sensitive information can also be restricted to different authorization levels, ensuring that the LLM generates appropriate responses.
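Restricting sensitive information usually means filtering documents by the user's permissions before anything reaches the prompt. The sketch below assumes a hypothetical three-level clearance scheme (`LEVELS`) and a hypothetical `authorized_docs` helper; real systems typically enforce this as a metadata filter in the vector store or at the data layer.

```python
from dataclasses import dataclass

# Hypothetical clearance ordering; adapt to your own authorization model
LEVELS = {"public": 0, "internal": 1, "confidential": 2}

@dataclass
class Document:
    doc_id: str
    text: str
    level: str  # one of the keys in LEVELS

def authorized_docs(docs: list[Document], user_level: str) -> list[Document]:
    """Drop documents above the user's clearance *before* retrieval,
    so restricted text can never end up in the LLM prompt."""
    max_level = LEVELS[user_level]
    return [d for d in docs if LEVELS[d.level] <= max_level]

corpus = [
    Document("d1", "Office hours are 9 to 5.", "public"),
    Document("d2", "Q3 roadmap draft.", "internal"),
    Document("d3", "Pending acquisition terms.", "confidential"),
]
print([d.doc_id for d in authorized_docs(corpus, "internal")])  # ['d1', 'd2']
```

Filtering before retrieval, rather than asking the LLM to withhold restricted content, keeps the guarantee outside the model's control.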

Why Naive RAG Falls Short and the Critical Role of Agents

While traditional RAG techniques provide an efficient means of enhancing generative AI models with relevant, real-time data, there are inherent limitations to relying solely on a simple RAG pipeline:

- Single-shot Interactions

The model generates a response based on a single query without retaining the context for future interactions.

- No Complex Query Understanding

Queries are processed at a surface level, with no inherent ability to plan or break down complex tasks into manageable components.

- Lack of Reflection and Error Correction

RAG pipelines do not have mechanisms for evaluating the quality of the generated response or rectifying potential errors.

- Absence of Multi-step Planning

Naive RAG is often ineffective for queries requiring a sequence of tasks or conditional steps.

- No Dynamic Tool Use

A naive RAG system cannot leverage external APIs or databases for more advanced actions or adapt its approach in real time.

Agentic RAG represents a paradigm shift — an evolution that uses agents to address these shortcomings by allowing for more sophisticated interactions. Agents in this context can understand multi-step tasks, reason dynamically, and make decisions to solve complex problems autonomously. Additionally, these systems can evaluate retrieved data quality, ensuring better decision-making and response generation.

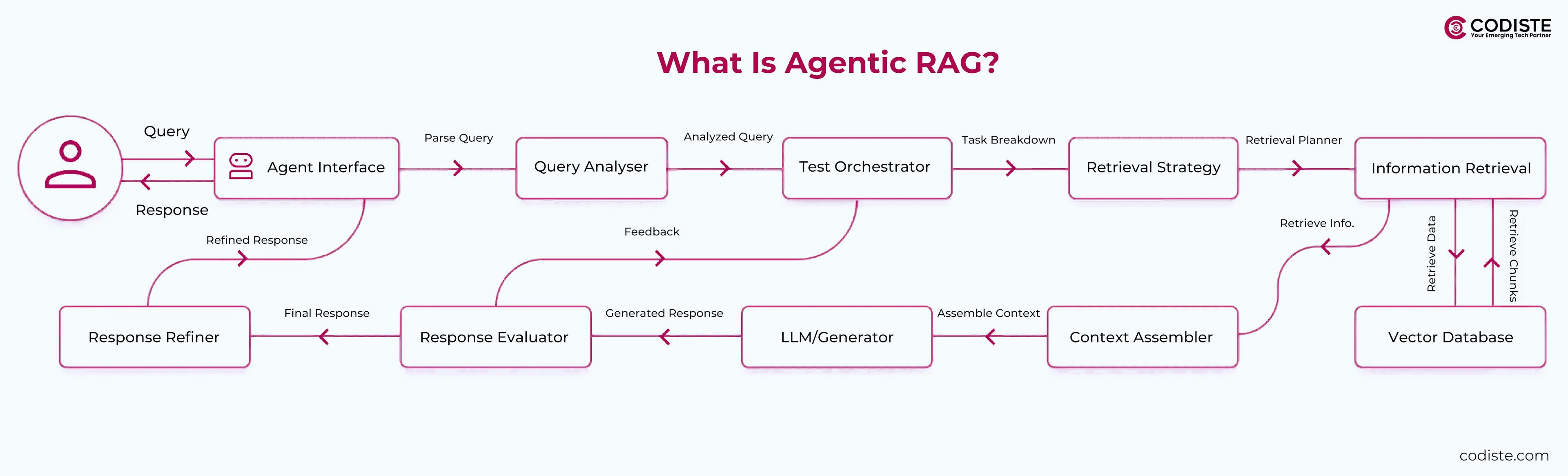

What is Agentic RAG?

Imagine an AI paradigm that combines an AI agent's decision-making with RAG's ability to pull dynamic data for autonomous information access and generation, making AI systems more independent, flexible, and capable of tackling real-world problems.

Agentic RAG has two foundational components: AI agents and RAG.

An AI agent is an autonomous entity capable of perceiving its environment, making decisions, and taking action to achieve its goals. It derives its autonomy from reasoning and planning and exhibits proactive rather than merely reactive behavior, allowing the AI to determine its next action independently instead of waiting for instructions.

Retrieval-Augmented Generation (RAG), on the other hand, overcomes the limits of static AI models by dynamically retrieving up-to-date information from sources like databases or APIs, enabling them to generate contextually accurate and relevant responses.

Its versatility is demonstrated in fields like education, business, and healthcare, where real-time data is critical.

When those two are combined, the result is an AI assistant that doesn't just follow orders but actively solves problems independently.


How Does Agentic RAG Work?

Agentic RAG rests on four pillars: autonomy, dynamic retrieval, augmented generation, and a feedback loop. Together, they let the system not only know what needs to be done but also figure out where to find the necessary information.

Autonomous Decision Making

Agentic RAG identifies what's needed to complete a task without waiting for explicit instructions. For instance, if it encounters an incomplete dataset or a question requiring additional context, it autonomously determines the missing elements and seeks them out. This independence allows it to function as a proactive problem-solver.

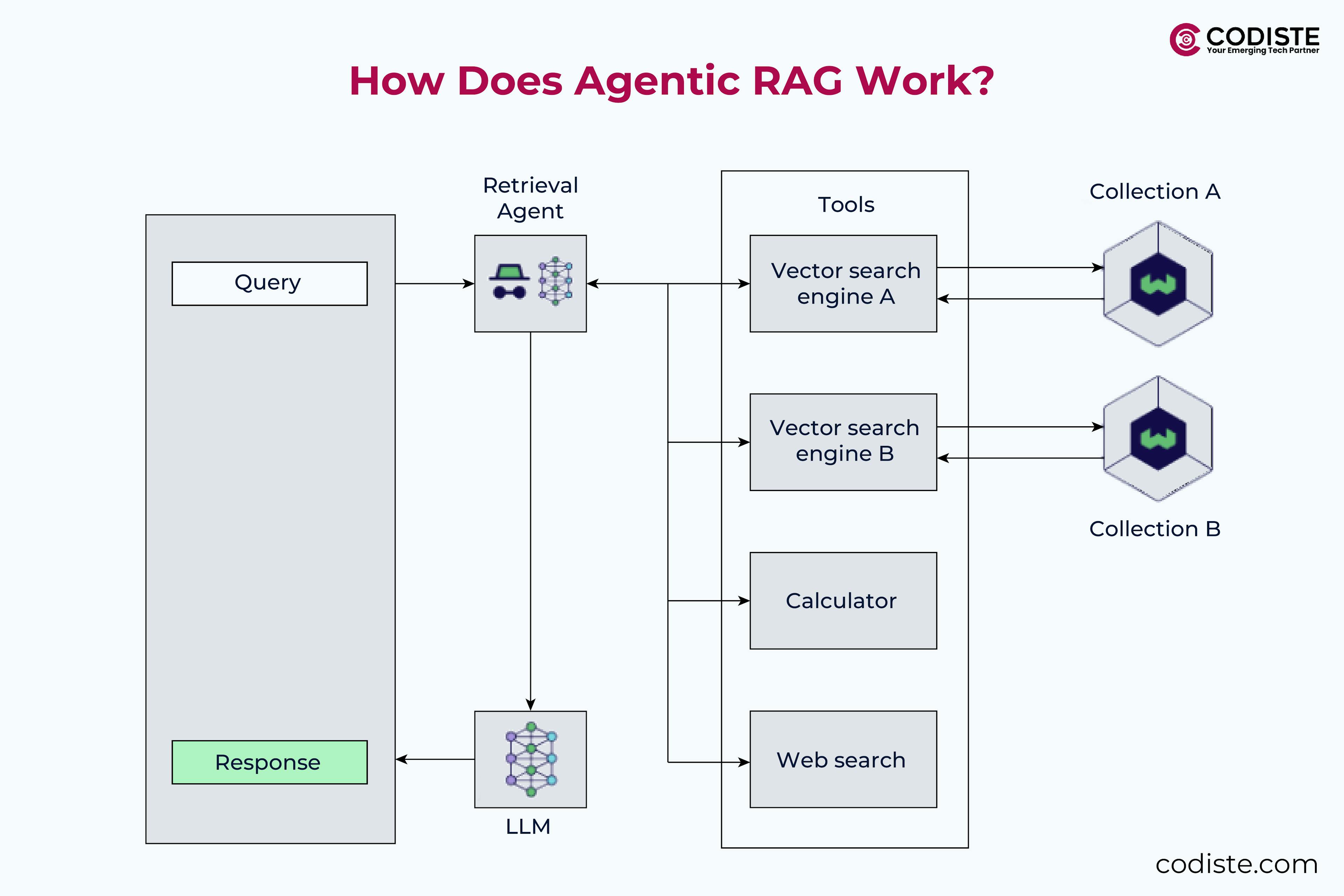

Dynamic Information Retrieval

Unlike traditional models that rely on static, pre-trained knowledge, agentic RAG dynamically accesses real-time data. It uses advanced tools like APIs, databases, and knowledge graphs to fetch the most relevant and up-to-date information. Whether it's current market trends or the latest research insights, this ensures its outputs are timely and accurate.

Augmented Generation for Contextual Outputs

Retrieved data isn't presented as-is—instead, agentic RAG processes and integrates it into a coherent response. It combines external information with its internal knowledge to craft outputs that are accurate, meaningful, and tailored to the context. This capability elevates it from a mere information retriever to an intelligent assistant.

Continuous Learning and Improvement

The system incorporates feedback into its process, refining its responses and adapting to evolving tasks. Each iteration makes the agentic RAG system more competent and efficient, much like a human improving their skills through experience. This feedback loop supports long-term performance improvement.
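The four pillars can be illustrated with a deliberately small control loop. This is a sketch under stated assumptions, not a production design: `SOURCES` is a hypothetical pair of tools, `generate` is a stub standing in for an LLM call, and the "feedback" step is a simple completeness check that decides whether to iterate again.

```python
from typing import Optional

# Hypothetical tools the agent can consult; real ones might be a SQL database and a REST API
SOURCES = {
    "database": {"revenue": "Revenue was $12M in 2023."},
    "api": {"headcount": "Headcount is 85 employees."},
}

def retrieve(topic: str) -> Optional[str]:
    """Dynamic retrieval: ask each source in turn until one knows the topic."""
    for source in SOURCES.values():
        if topic in source:
            return source[topic]
    return None

def generate(evidence: dict[str, str]) -> str:
    """Augmented generation: a stub that stitches evidence into one answer."""
    return " ".join(evidence[t] for t in sorted(evidence))

def run_agent(required: list[str], max_steps: int = 3) -> tuple[str, list[str]]:
    """Return (answer, unresolved_topics) after at most max_steps iterations."""
    evidence: dict[str, str] = {}
    answer = ""
    for _ in range(max_steps):
        # Autonomy: the agent itself works out which pieces are still missing
        missing = [t for t in required if t not in evidence]
        for topic in missing:
            fact = retrieve(topic)
            if fact is not None:
                evidence[topic] = fact
        answer = generate(evidence)
        # Feedback loop: stop once every required topic is covered, otherwise retry.
        # A real agent would rephrase queries or switch tools between iterations.
        if all(t in evidence for t in required):
            return answer, []
    return answer, [t for t in required if t not in evidence]

print(run_agent(["revenue", "headcount"]))
# ('Headcount is 85 employees. Revenue was $12M in 2023.', [])
print(run_agent(["revenue", "churn"]))
# ('Revenue was $12M in 2023.', ['churn'])
```

Returning the unresolved topics, instead of silently answering with partial evidence, gives the caller (or a supervising agent) something concrete to act on.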

The Difference Between Agentic RAG vs. Traditional RAG

Traditional RAG systems operate reactively, depending heavily on predefined queries and explicit human guidance at each stage of the data retrieval process. These systems are limited by their reliance on structured input and their inability to deviate from the given instructions. They function as static information retrieval tools, retrieving data based solely on the specific query provided. This rigid approach limits their adaptability and problem-solving capabilities.

A good analogy for understanding traditional RAG would be going to a library with a specific list of books—you need to know what you're looking for, as the system won't assist beyond your instructions.

In contrast, agentic RAG systems are designed to be proactive and autonomous. Agentic RAG systems can autonomously retrieve and integrate relevant information from diverse sources, including real-time data streams and external APIs, by continuously analyzing the context and user intent. This proactive approach enables them to generate comprehensive and contextually relevant responses without requiring explicit human intervention. Optimizing the structure of a user query is crucial in improving retrieval quality and relevance in these systems.

Using the same analogy, agentic RAG is like hiring a research assistant who finds the best resources and organizes and summarizes them into a polished report, significantly saving time and effort.

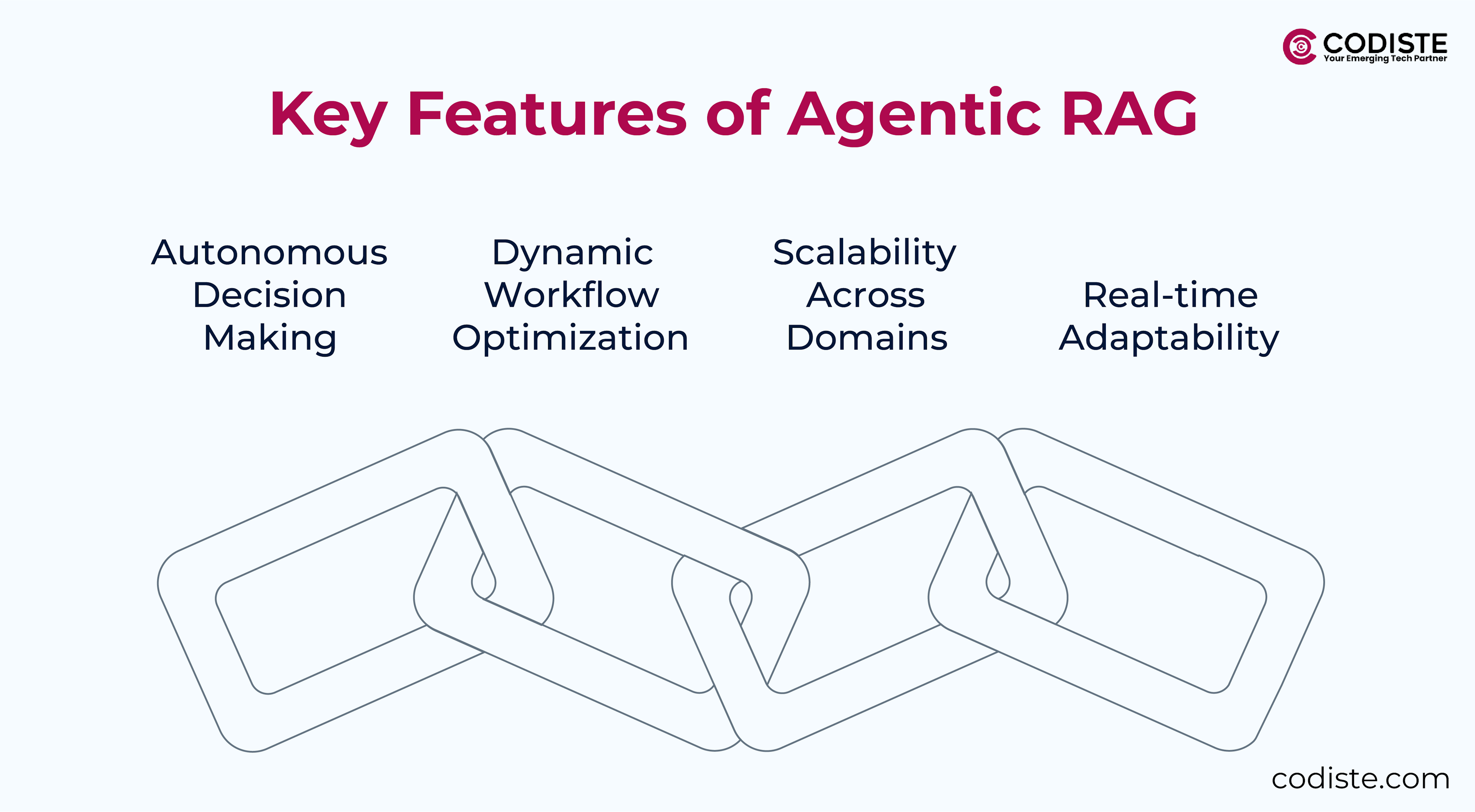

Key Features That Make Agentic RAG Unique

Agentic RAG isn't just an upgrade — it's a paradigm shift. Here's why:

1. Autonomous Decision Making

Unlike traditional RAG systems that follow predefined workflows, Agentic RAG uses intelligent agents to make autonomous decisions. These agents assess the retrieved data, identify gaps, and adjust the retrieval or generative processes as needed.

2. Dynamic Workflow Optimization

Agentic RAG continuously refines workflows based on real-time inputs. For example, in a customer support scenario, it could dynamically prioritize queries based on urgency or complexity.
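As a simple illustration of dynamic prioritization, the sketch below orders incoming support tickets with a heap, so each new ticket is slotted in by score as it arrives. The `URGENCY` weights and the `priority` formula are hypothetical placeholders; an agentic system might instead ask an LLM to estimate urgency and complexity, but the queueing mechanics would look similar.

```python
import heapq
import itertools

# Hypothetical urgency weights; an agent might instead ask an LLM to classify each ticket
URGENCY = {"outage": 3, "billing": 2, "question": 1}

def priority(ticket: dict) -> int:
    """Higher score = handled sooner. Urgency dominates; complexity (assumed 0-9)
    orders tickets within the same urgency band."""
    return URGENCY.get(ticket["type"], 0) * 10 + ticket["complexity"]

class TicketQueue:
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, dict]] = []
        self._counter = itertools.count()  # tie-breaker: equal scores stay first-in, first-out

    def push(self, ticket: dict) -> None:
        # heapq is a min-heap, so store the negated score to pop the highest priority first
        heapq.heappush(self._heap, (-priority(ticket), next(self._counter), ticket))

    def pop(self) -> dict:
        return heapq.heappop(self._heap)[2]

queue = TicketQueue()
queue.push({"id": "T1", "type": "question", "complexity": 2})
queue.push({"id": "T2", "type": "outage", "complexity": 1})
queue.push({"id": "T3", "type": "billing", "complexity": 5})
print([queue.pop()["id"] for _ in range(3)])  # ['T2', 'T3', 'T1']
```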

3. Scalability Across Domains

With its modular and adaptive nature, Agentic RAG can scale seamlessly across industries — from healthcare and finance to e-commerce and education.

4. Real-time Adaptability

Traditional systems struggle with rapidly changing environments. Agentic RAG thrives in such scenarios by adapting to new information or contexts without manual intervention.

Enhancing Retrieval-Augmented Generation (RAG) with Advanced AI Agents

Agentic RAG integrates agents into the RAG pipeline to perform complex operations, enabling an enhanced decision-making process that is multi-step, reflective, and adaptive. As autonomous units within an agentic RAG framework, RAG agents perform specialized tasks to improve the efficiency and performance of retrieval and generation processes.

Below, we explore key aspects of Agentic RAG.

1. Routing and Tool Use with Multiple Agents

The simplest form of agentic reasoning involves routing and tool use. A routing agent selects the best LLM or tool to handle a specific query based on its type, enabling agents to interact with external resources through a toolkit that integrates functionalities like search and database management. This allows context-sensitive decisions, such as whether a document needs a summary or more detailed parsing.
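A routing agent can be sketched as a function that maps a query to a tool. Below, simple keyword rules stand in for the LLM classification step a real router would usually perform, and `web_search`, `sql_lookup`, and `summarize` are hypothetical stubs rather than real integrations.

```python
from typing import Callable

# Hypothetical tools; in practice these would wrap a search API, a SQL database, an LLM, etc.
def web_search(query: str) -> str:
    return f"search results for: {query}"

def sql_lookup(query: str) -> str:
    return f"database rows for: {query}"

def summarize(query: str) -> str:
    return f"summary of: {query}"

# Keyword rules stand in for the LLM call a real routing agent would make
ROUTES: list[tuple[tuple[str, ...], Callable[[str], str]]] = [
    (("summarize", "summary", "tl;dr"), summarize),
    (("how many", "total", "average", "count"), sql_lookup),
]

def route(query: str) -> Callable[[str], str]:
    """Pick the first tool whose trigger words appear in the query; fall back to search."""
    q = query.lower()
    for triggers, tool in ROUTES:
        if any(t in q for t in triggers):
            return tool
    return web_search

for q in ["Summarize this contract", "How many orders shipped in May?", "Latest EU AI Act news"]:
    tool = route(q)
    print(tool.__name__, "->", tool(q))
```

Keeping the fallback explicit means an unrecognized query still reaches some tool instead of failing outright.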

2. Memory for Persistent Context

Memory is crucial for maintaining context across multiple interactions. While naive RAG handles each query independently, memory-enabled agents in Agentic RAG can utilize vector databases to manage conversation histories, enabling more coherent and contextually aware responses over time.
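The sketch below illustrates memory-enabled recall: each past turn is stored with a vector, and the turns most similar to a new query are pulled back into context. It assumes a bag-of-words `embed` function and cosine similarity as stand-ins for a real embedding model and vector database; `ConversationMemory` is a hypothetical class, not a library API.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Bag-of-words vector; a placeholder for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ConversationMemory:
    """Stores past turns and recalls the most relevant ones for a new query."""

    def __init__(self) -> None:
        self.turns: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.turns.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        qv = embed(query)
        ranked = sorted(self.turns, key=lambda t: cosine(qv, t[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

memory = ConversationMemory()
memory.add("User: my order number is 4417 and it arrived damaged")
memory.add("User: I prefer email over phone calls")
print(memory.recall("what was the order number again?"))
# ['User: my order number is 4417 and it arrived damaged']
```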

3. Planning with Subquestion Engines

Complex queries often need to be broken down into smaller, manageable tasks. This approach exemplifies the essence of multi-step planning, allowing agents to explore different aspects of a query systematically.

For example, if asked to compare the financial performance of two companies, an agent using the Subquestion Query Engine can retrieve data on each company separately and then generate a comparative analysis based on the individual results.
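The company comparison above can be sketched as three stages: decompose, answer each sub-question, then synthesize. This is a plain-Python illustration, not the API of any particular Subquestion Query Engine: `FINANCIALS` holds made-up figures for two fictional companies, and `answer_subquestion` stands in for a per-company retrieval call.

```python
# Made-up figures for fictional companies, standing in for separately indexed filings
FINANCIALS = {
    "Acme": {"revenue": 120.0, "net_income": 18.0},
    "Globex": {"revenue": 95.0, "net_income": 19.0},
}

def decompose(companies: list[str]) -> list[tuple[str, str]]:
    """Planning: turn one comparison question into one sub-question per company."""
    return [(c, f"What were {c}'s revenue and net income?") for c in companies]

def answer_subquestion(company: str, question: str) -> dict[str, float]:
    """Hypothetical retrieval step; a real engine would run `question` against that company's index."""
    return FINANCIALS[company]

def compare(companies: list[str]) -> str:
    results = {c: answer_subquestion(c, q) for c, q in decompose(companies)}
    # Synthesis: combine the individual answers into a comparative analysis
    margins = {c: r["net_income"] / r["revenue"] for c, r in results.items()}
    lines = [f"{c}: revenue {results[c]['revenue']}, net margin {m:.1%}" for c, m in margins.items()]
    best = max(margins, key=margins.get)
    return "\n".join(lines + [f"Higher net margin: {best}"])

print(compare(["Acme", "Globex"]))
```

Because each sub-question is answered independently, the per-company retrievals could also run in parallel before the synthesis step.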

4. Reflection and Error Correction

Reflective agents go beyond merely generating a response — they evaluate the quality of their output and correct it if necessary. This capability is essential for ensuring that responses are accurate and align with the intended objectives of the enterprise.
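A reflection loop can be sketched as generate, critique, and regenerate with the critique fed back in. In this minimal sketch, `toy_generate` and `toy_critique` are hypothetical stand-ins for an LLM and an evaluator (which in practice could be an LLM-as-judge or a rule-based check), and `max_rounds` bounds the number of correction attempts.

```python
from typing import Callable

def reflect_and_correct(
    generate: Callable[[str, str], str],
    critique: Callable[[str], str],
    question: str,
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Generate a draft, self-evaluate it, and regenerate using the critique as feedback.

    `critique` returns an empty string when the draft passes.
    Returns (final_draft, rounds_used).
    """
    feedback = ""
    draft = ""
    for round_no in range(1, max_rounds + 1):
        draft = generate(question, feedback)
        feedback = critique(draft)
        if not feedback:
            return draft, round_no
    return draft, max_rounds

# Toy stand-ins: the "model" omits units until told, the "critic" checks for them
def toy_generate(question: str, feedback: str) -> str:
    return "The tank holds 50 litres." if "unit" in feedback else "The tank holds 50."

def toy_critique(draft: str) -> str:
    return "" if "litres" in draft else "Answer is missing a unit."

print(reflect_and_correct(toy_generate, toy_critique, "How big is the tank?"))
# ('The tank holds 50 litres.', 2)
```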

5. Advanced Agentic Reasoning: Tree-based Exploration

Tree-based exploration is used when agents must explore multiple potential pathways to achieve a goal. Unlike sequential decision-making, tree-based reasoning allows an agent to evaluate several strategies simultaneously, selecting the most promising one based on real-time evaluation metrics.
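Beam search is one common way to implement tree-based exploration, and it is the technique used in this sketch (other options, such as Monte Carlo tree search, also exist). At each depth, every candidate plan is expanded, all children are scored, and only the `beam_width` best are kept. The step names, their values in `OPTIONS`, and the discounting heuristic in `score` are hypothetical; a real agent might score partial plans with an LLM judge or a retrieval-quality metric.

```python
import heapq
from typing import Callable

Plan = tuple[str, ...]

def beam_search(
    root: Plan,
    expand: Callable[[Plan], list[Plan]],
    score: Callable[[Plan], float],
    depth: int,
    beam_width: int = 2,
) -> Plan:
    """Explore several candidate plans per level, keeping only the best-scoring few."""
    frontier = [root]
    for _ in range(depth):
        children = [child for node in frontier for child in expand(node)]
        if not children:
            break  # no further branches to explore
        frontier = heapq.nlargest(beam_width, children, key=score)
    return max(frontier, key=score)

# Hypothetical per-step values; a real agent might score partial plans with an LLM judge
OPTIONS = {"search_web": 0.4, "query_db": 0.7, "read_docs": 0.6, "ask_user": 0.2}

def expand(plan: Plan) -> list[Plan]:
    """Branch: every step not yet used is a possible next move."""
    return [plan + (step,) for step in OPTIONS if step not in plan]

def score(plan: Plan) -> float:
    """Hypothetical heuristic: later steps count slightly less (discount 0.9 per position)."""
    return sum(OPTIONS[step] * 0.9 ** i for i, step in enumerate(plan))

print(beam_search((), expand, score, depth=2))  # ('query_db', 'read_docs')
```

Setting `beam_width=1` reduces this to greedy, one-path-at-a-time decision-making; wider beams trade extra scoring calls for a better chance of finding the strongest plan.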


Why Adaptability is Key for Autonomous AI Agents

AI agents are intelligent software entities that perceive and respond to their environment. They are designed to operate autonomously, making decisions and taking actions based on their programming and the information they receive. Autonomous AI agents are essential for sequential decision-making, flexibility, and planning tasks.

AI agents can be categorized into several types, including simple reflex agents, model-based reflex agents, goal-based agents, and utility-based agents. Each type of agent has its strengths and weaknesses, and the choice of agent depends on the specific application and requirements.

Simple reflex agents are the most basic type of agent and react to the current state of the environment without considering future consequences. Model-based reflex agents have an internal model of the environment and can make decisions based on this model. Goal-based agents have a specific goal and make decisions based on achieving this goal. Utility-based agents assign numerical values to different states or outcomes and make decisions based on maximizing these values.

These agents are crucial in enabling RAG systems to perform complex tasks autonomously. By incorporating reasoning and planning, AI agents can independently determine their subsequent actions, making them proactive problem-solvers rather than mere reactive entities.
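To make the contrast between agent types concrete, the sketch below places the two ends of the spectrum side by side in a retrieval setting: a simple reflex agent that maps a condition on the current query directly to an action, and a utility-based agent that scores every available action and picks the maximum. The action names and the quality and cost numbers are hypothetical.

```python
# Simple reflex agent: condition-action rules on the current input only, no model or lookahead
def reflex_agent(query: str) -> str:
    if "price" in query.lower():
        return "query_pricing_db"
    return "search_docs"

# Utility-based agent: assigns a number to each action and maximizes it.
# Hypothetical numbers: expected answer quality and a relative cost per action.
ACTIONS = {
    "search_docs": {"quality": 0.6, "cost": 0.1},
    "query_pricing_db": {"quality": 0.9, "cost": 0.2},
    "ask_human": {"quality": 0.95, "cost": 0.7},
}

def utility(action: str, cost_weight: float) -> float:
    a = ACTIONS[action]
    return a["quality"] - cost_weight * a["cost"]

def utility_agent(cost_weight: float = 1.0) -> str:
    """Pick the action with the highest quality-minus-cost utility."""
    return max(ACTIONS, key=lambda act: utility(act, cost_weight))

print(reflex_agent("What is the price of plan B?"))  # query_pricing_db
print(utility_agent(cost_weight=1.0))               # query_pricing_db
print(utility_agent(cost_weight=0.0))               # ask_human
```

Changing `cost_weight` changes the utility agent's choice without rewriting any rules, which is the flexibility the reflex agent lacks.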

Streamlining Complexity in Agentic RAG with Workflows

As AI systems evolve, the complexity of applications also increases. To manage this complexity, businesses need workflows that provide an abstraction for managing multi-step, agent-based interactions. Workflows define the entire flow of actions that an agent must execute, allowing developers to create sophisticated, conditional logic without losing control of the overall structure.

- Sequential and Looping Workflows

Simple workflows may consist of sequential tasks, whereas more advanced ones might include loops for self-reflection and improvement.

- Shared Global State

Workflows share a global state, simplifying data management across multiple steps. This shared state is essential for maintaining consistency across different stages of a multi-agent process.

Using workflows, developers can build more advanced, production-ready applications that retain clarity and modularity even as complexity grows.
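The sketch below shows a framework-agnostic workflow with both patterns described above: a sequential retrieve-then-generate path, a review step that loops back to generation, and a shared `state` dictionary that every step reads and writes. The step functions and the two-attempt quality bar are hypothetical, and this is not the workflow API of any particular library. `max_transitions` guards against a loop that never terminates.

```python
from typing import Callable

State = dict  # shared global state passed to every step

def retrieve_step(state: State) -> str:
    state["context"] = f"docs about {state['query']}"
    return "generate"  # each step returns the name of the next step

def generate_step(state: State) -> str:
    state["attempts"] = state.get("attempts", 0) + 1
    state["answer"] = f"draft {state['attempts']} using {state['context']}"
    return "review"

def review_step(state: State) -> str:
    # Loop back for self-improvement until the hypothetical quality bar is met
    return "done" if state["attempts"] >= 2 else "generate"

STEPS: dict[str, Callable[[State], str]] = {
    "retrieve": retrieve_step,
    "generate": generate_step,
    "review": review_step,
}

def run_workflow(query: str, start: str = "retrieve", max_transitions: int = 10) -> State:
    state: State = {"query": query, "trace": []}
    step = start
    for _ in range(max_transitions):
        if step == "done":
            break
        state["trace"].append(step)
        step = STEPS[step](state)
    return state

final = run_workflow("refund policy")
print(final["trace"])   # ['retrieve', 'generate', 'review', 'generate', 'review']
print(final["answer"])  # draft 2 using docs about refund policy
```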

How Agentic RAG is Transforming Industries

The application of Agentic RAG in enterprise environments extends across multiple domains, each benefiting from the enhanced reasoning and adaptability of agents:

- Customer Service and Support

Beyond simply fetching information, autonomous agents can use agentic RAG to adapt their responses to the specific context of a customer's issue. Agents can interact with customers in a multi-turn dialogue, use RAG to retrieve relevant information, and even execute actions such as booking appointments or resolving issues, all in a seamless, context-aware manner. Agentic RAG can also enable agents to learn from past interactions, continuously improving their ability to handle complex customer inquiries and personalize their support.

- Market Analysis and Reporting

By leveraging subquestion planning, agents can gather and compare market data, generate insights, and prepare detailed reports autonomously. These capabilities reduce manual workloads and enhance the accuracy and timeliness of business insights.

- Knowledge Management

Large organizations often struggle to use their internal knowledge bases efficiently. Agentic RAG systems can retrieve relevant data, refine searches, and provide summaries that aid departmental decision-making, facilitating better collaboration and knowledge dissemination.

- Automated Business Workflows

Multi-agent systems can handle intricate workflows like procurement processes or financial audits. By breaking down tasks, coordinating between agents, and leveraging memory, the system can autonomously manage routine operations, freeing employees to focus on more strategic work.

- Education

Intelligent tutoring systems powered by agentic RAG can adapt their teaching strategies to individual student needs, providing personalized learning experiences. These systems can optimize learning outcomes by dynamically retrieving and presenting educational content based on a student's current understanding and learning style.

- Healthcare

The ability of agentic RAG to synthesize up-to-date medical research can improve clinical decision-making. By providing clinicians with evidence-based recommendations tailored to a patient's specific condition and medical history, it can improve diagnostic accuracy and treatment outcomes. Agentic RAG can also assist in identifying potential drug interactions or adverse effects, enhancing patient safety. Additionally, it can aid medical education by giving medical students and residents access to relevant research and clinical guidelines.

- Business Intelligence

Agentic RAG can improve the process of generating business reports by automating the retrieval and analysis of key performance indicators (KPIs). This can save analysts countless hours and enable them to focus on higher-level tasks such as interpreting insights and formulating strategic recommendations.

- Scientific Research

Researchers can use agentic RAG to quickly identify relevant studies, extract key findings, and synthesize information from diverse sources.

Key Technical Challenges in RAG and How to Overcome Them

Implementing Agentic RAG is not without its challenges:

- Complexity of Coordination

Effective communication between multiple agents requires sophisticated management of shared memory, context, and workflows.

- Latency

The increased complexity of agentic interactions often leads to longer response times. Striking a balance between speed and accuracy is a critical challenge.

- Evaluation and Observability

As Agentic RAG systems become more complex, continuous assessment and observability become necessary. LlamaIndex integrates tools like Arize Phoenix to trace agent actions, providing visibility into agents' internal workings and performance.

- Retrieval Accuracy

Ensuring that the fetched data is relevant and of high quality.

- Integration Complexity

Balancing the interaction between agentic AI, retrieval systems, and generative models can be difficult.

- Bias and Fairness

Avoiding biases in training data and retrieved content remains a critical concern.

- Scalability

Managing real-time operations at scale can introduce many scalability challenges.
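For the evaluation and observability challenge, the core idea behind tracing tools can be sketched with a decorator that records each agent step's name, duration, and outcome. This dependency-free sketch is not how LlamaIndex or Arize Phoenix work internally; `traced`, `TRACE`, and the two stub steps are hypothetical, and a real setup would export spans to a tracing backend. The recorded durations also give a first handle on the latency challenge.

```python
import functools
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []  # in-memory span log; a real setup would export these

def traced(fn: Callable) -> Callable:
    """Record each agent step's name, duration in ms, and success/failure."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        status = "ok"
        try:
            return fn(*args, **kwargs)
        except Exception:
            status = "error"
            raise  # re-raise so callers still see the failure
        finally:
            TRACE.append({
                "step": fn.__name__,
                "ms": round((time.perf_counter() - start) * 1000, 2),
                "status": status,
            })
    return wrapper

@traced
def retrieve(query: str) -> list[str]:
    time.sleep(0.01)  # simulate a vector-store call
    return [f"doc for {query}"]

@traced
def generate(docs: list[str]) -> str:
    return f"answer from {len(docs)} doc(s)"

answer = generate(retrieve("latency budget"))
for span in TRACE:
    print(span)
```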

Conclusion

Over the coming years, AI workflow optimization will shift from tools that assist to systems that act, adapt, and deliver meaningful results with minimal human intervention. Agentic RAG represents that leap forward: AI agent development companies that adopt it can address the limitations of naive RAG while unlocking new possibilities for automation, decision-making, and process optimization.

By integrating multi-agent systems with sophisticated RAG pipelines, enterprises can achieve unprecedented intelligence and autonomy in their operations. For enterprises seeking to gain a competitive edge in an increasingly data-driven world, Agentic RAG offers a robust, adaptable, and highly effective solution poised to shape the future of AI-powered business automation.

If you found this exploration of Agentic RAG insightful, let's continue the conversation about how intelligent, dynamic AI systems are changing the world, because the future of generative AI lies in agentic AI, where LLMs and knowledge bases are dynamically orchestrated to create autonomous assistants.

If you are an enterprise or a start-up seeking to drive business processes with AI-driven agents, Codiste can help create agents that enhance decision-making, adapt to complex tasks, and deliver authoritative, verifiable results for our clients. Let's connect to explore the synergies.
