Generative AI models have advanced rapidly and drawn growing scrutiny in recent years. Venture capitalists, lawmakers, and the general public are all taking part in the conversation around tools such as ChatGPT and Google Bard. Several startups in this sector have secured significant funding over the past year, reflecting this growing interest. Generative AI has considerable potential to drive innovative solutions, which makes it a valuable advantage for forward-thinking organizations.

So, it's no surprise that generative AI is making waves. Folks are excited about coming up with creative new solutions. This blog will give you a solid understanding of generative AI. Plus, we will walk you through the steps to build your very own generative AI systems.

Generative AI is a kind of artificial intelligence that creates original content. It learns from existing data such as text, images, or audio. It can help in many ways: making art and music, writing text, improving ads, augmenting datasets, and even producing synthetic data for areas where real data is scarce. Several methods power generative AI, such as transformers, generative adversarial networks (GANs), and variational auto-encoders. Transformer-based models like GPT-3, LaMDA, Wu-Dao, and ChatGPT are skilled at weighing the importance of different parts of their input, which makes them good at learning to produce coherent text or images. Generative AI models can examine large amounts of data, learn the patterns in it, and then produce new, high-quality results.

Generative adversarial networks (GANs) are composed of two neural networks, a generator and a discriminator, that are trained against each other until they reach a balance. The generator creates new data that mimics the real source data. Meanwhile, the discriminator tries to tell the generated data apart from the source data, and it gets better at recognizing which samples are authentic. This back-and-forth leads both networks to improve over time.
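To make the generator-versus-discriminator idea more concrete, here is a minimal sketch of the two training objectives in plain Python. It assumes the discriminator outputs a probability that a sample is real, and it uses the common "non-saturating" generator loss; the function names are our own and not taken from any library.

```python
import math
from typing import Sequence


def bce(prediction: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single probability."""
    p = min(max(prediction, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """The discriminator wants D(real) close to 1 and D(fake) close to 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """The generator wants the discriminator to score its samples as real."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# Illustrative discriminator outputs (made-up numbers, not real results).
confident = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
confused = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
```

In a real GAN these losses would be minimised in alternation with a deep learning framework. The point here is only that the two networks optimise opposing goals on the same discriminator outputs.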

Variational auto-encoders use an encoder to compress the input into a compact code. This code captures the distribution of the input data in a lower-dimensional form. The decoder then uses it to reconstruct the original input. By compressing information into this code, variational auto-encoders give generative AI an efficient way to learn data distributions as compact representations. The encoder-decoder arrangement helps generative models produce realistic outputs.
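The two pieces that distinguish a variational auto-encoder from a plain auto-encoder can be sketched in a few lines. This is an illustrative example, assuming the encoder outputs a mean and a log-variance per latent dimension and that the prior is a standard normal distribution; the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def reparameterize(mu: Sequence[float], log_var: Sequence[float],
                   rng: random.Random) -> List[float]:
    """Sample a latent code z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))


# Hypothetical encoder output for one input with a 2-dimensional latent code.
rng = random.Random(0)
z = reparameterize(mu=[0.3, -1.2], log_var=[-0.5, 0.1], rng=rng)
penalty = kl_to_standard_normal(mu=[0.3, -1.2], log_var=[-0.5, 0.1])
```

During training, the KL term is added to the reconstruction error so that the codes stay close to the prior, which is what allows new samples to be drawn from it later.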

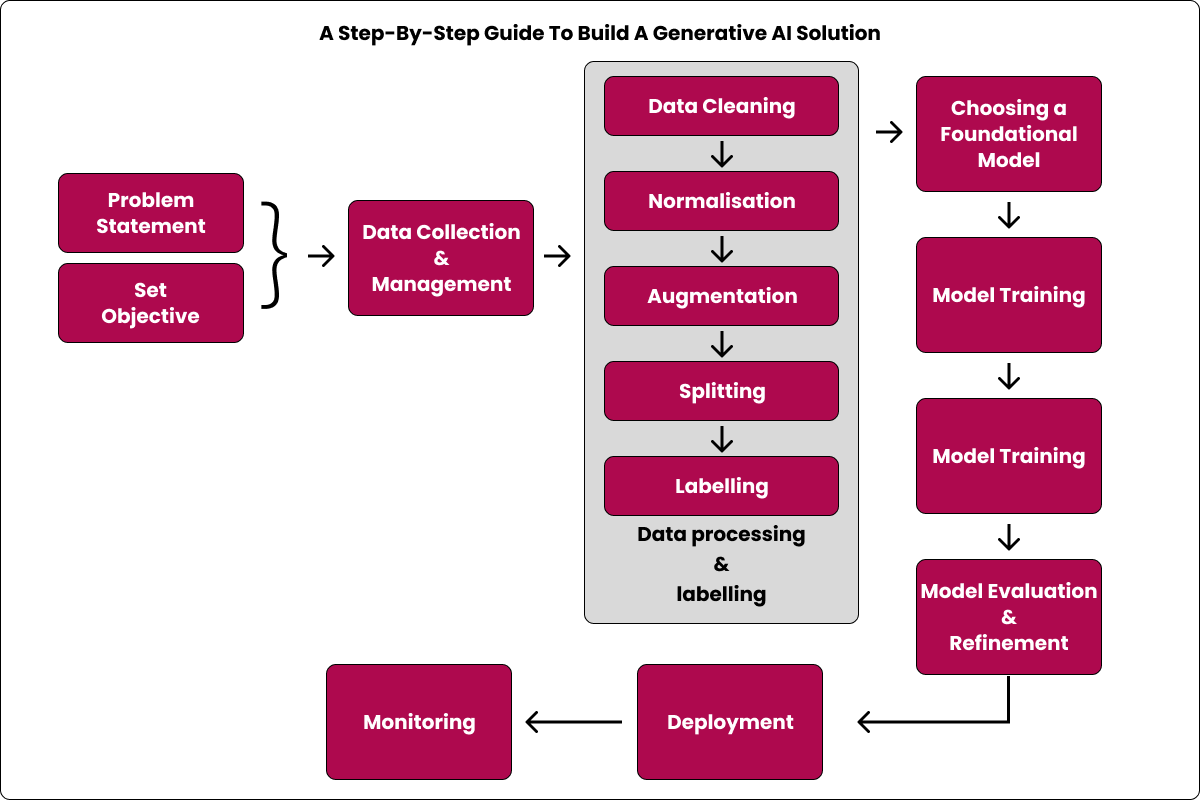

Developing a generative AI system demands a solid understanding of the technology and of the particular problem it is meant to solve. It entails designing and training AI models to produce new outputs from input data. Several stages are necessary to build an effective generative AI solution: defining the problem, collecting and pre-processing data, choosing suitable algorithms and models, training and fine-tuning the models, and deploying the system in a real-world setting. Now, let's go deeper into this process.

Building good generative AI starts with clearly defining the problem and desired outputs. Be it generating text, images, or sounds, being specific about needs is key to tackling unique challenges and collecting suitable data.

Details of the target outputs then guide model complexity and data needs. For text, consider language and style constraints. For images, consider resolution and artistic style, such as color schemes. For music and other sounds, consider audio formats and genres.

With clear goals, explore technical approaches suited to the data: RNNs or Transformers for text, CNNs for images. Know each model's strengths and limits to set realistic expectations; GPT-3, for example, produces coherent short texts but can struggle with long passages. Finally, define concrete metrics up front so the outcomes can be evaluated.

Good AI needs lots of high-quality, ethically obtained data. Data sources should contain diverse, relevant examples that resemble the AI's intended uses. For images, varied captures from different angles, lighting conditions, and backgrounds are best. For text, diversity of language is key.

Preparing the data is also crucial. Cleaning removes errors and inconsistencies. Normalisation and augmentation help models generalise. Extracting key features boosts efficiency. Splitting data into training, validation, and testing sets makes it possible to detect overfitting. Labelling supports supervised learning by assigning categories to examples, often manually.
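As an illustration of the splitting step, the sketch below shuffles a dataset with a fixed seed and cuts it into training, validation, and test sets. The 80/10/10 proportions and the function name are assumptions chosen for the example, not a recommendation for every project.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(examples: Sequence[T], train_frac: float = 0.8,
                  val_frac: float = 0.1, seed: int = 0
                  ) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into three disjoint parts."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]      # whatever remains becomes the test set
    return train, val, test


train, val, test = split_dataset(range(100))
```

The model is fitted on the training set, tuned against the validation set, and scored once on the test set. A large gap between training and validation scores is a typical sign of overfitting.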

A thoughtful data preparation process produces clean, robust datasets that support model creation. Versioning datasets enables improvement as tasks and data change over time. A balanced approach considers both technical needs and ethics for responsible AI.

Once data is collected, it must be prepared and enriched before it can be used for training AI models. This involves cleaning, normalising, augmenting, and labelling the data.

Careful data processing and enrichment results in clean, robust datasets that can effectively train AI models.
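For text data, the cleaning and normalising steps described above might look something like the sketch below. It is a simplified example that assumes the raw records are short strings that may contain HTML remnants and duplicates; real pipelines usually need rules tailored to their own sources.

```python
import re
import unicodedata
from typing import Iterable, List


def clean_text(raw: str) -> str:
    """Normalise Unicode, drop HTML-like tags, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    text = re.sub(r"<[^>]+>", " ", text)      # strip simple HTML tags
    return re.sub(r"\s+", " ", text).strip()  # collapse runs of whitespace


def prepare_corpus(records: Iterable[str]) -> List[str]:
    """Clean every record, then drop empty entries and case-insensitive duplicates."""
    seen = set()
    cleaned = []
    for raw in records:
        text = clean_text(raw)
        key = text.lower()
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


corpus = prepare_corpus(["Hello   <b>world</b>", "hello world", "", "  New\nline "])
```

Here the second record is dropped as a duplicate of the first and the empty record is discarded, leaving two cleaned entries.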

Pre-trained models like GPT, LLaMA, and PaLM give generative AI projects a strong starting point. This saves time and computing power compared to training from scratch. Their exposure to massive amounts of data lets them learn general patterns and knowledge.

Pick a foundation model that matches your goal. GPT is good for text applications like chatbots because its transformer design handles paragraph coherence well. LLaMA enables multilingual output. PaLM's strengths depend on its evolving capabilities.

Also consider available data, infrastructure limits, how flexible the model is for customization, and community support. These impact how easily you can tailor the foundation later.

Fine-tuning a robust general foundation model on your particular dataset and needs is an efficient way to create custom generative AI. Choose a base model that fits your architecture and use case, and ensure you have the resources to fully leverage its capabilities.

Training a generative model involves feeding prepared data to a neural network so that it learns to identify and emulate the patterns in that data. Once a foundation model is adequately trained, fine-tuning becomes important. This refines the model for specific tasks or domains, for example generating poetry by training on poetic text.

Fine-tuning entails adjusting the model's weights using particular datasets to align outputs more closely with desired outcomes. Techniques like differential learning rates (training different layers at different rates) help. Tools like Hugging Face's Transformers library simplify fine-tuning many foundation models.
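The idea of differential learning rates can be sketched without any framework. In this example, which assumes the layers are listed from input to output, later layers get the full learning rate and earlier layers get progressively smaller ones, so general-purpose features change less during fine-tuning. The layer names, the decay factor, and both function names are hypothetical.

```python
from typing import Dict, List, Sequence


def layerwise_learning_rates(layer_names: Sequence[str], top_lr: float = 1e-4,
                             decay: float = 0.5) -> Dict[str, float]:
    """Give the last layer top_lr and shrink the rate by `decay` per earlier layer."""
    n = len(layer_names)
    return {name: top_lr * decay ** (n - 1 - i)
            for i, name in enumerate(layer_names)}


def sgd_step(weights: Dict[str, List[float]], grads: Dict[str, List[float]],
             rates: Dict[str, float]) -> Dict[str, List[float]]:
    """One plain gradient-descent update using a separate rate per layer."""
    return {name: [w - rates[name] * g
                   for w, g in zip(weights[name], grads[name])]
            for name in weights}


# Hypothetical layer names, ordered from input to output.
rates = layerwise_learning_rates(["embeddings", "block_0", "block_1", "head"])
```

In PyTorch, the same effect is usually achieved by passing the optimizer a list of parameter groups, each with its own `lr` value.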

After training, it is essential to evaluate the model by quantifying how closely its outputs resemble real data. However, evaluation alone is insufficient; continuous refinement to improve accuracy, reduce inconsistencies, and enhance quality is just as important.
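One simple way to quantify how closely generated text matches a reference is token-level F1 overlap, sketched below. It is only one of many possible metrics and is chosen here for brevity; it ignores word order and meaning, so in practice it is usually combined with other automatic metrics and human review.

```python
from collections import Counter


def token_f1(generated: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between two texts."""
    gen = generated.lower().split()
    ref = reference.lower().split()
    if not gen or not ref:
        return 0.0
    # Multiset intersection counts each shared token at most as often as it
    # appears in both texts.
    overlap = sum((Counter(gen) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(gen)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


score = token_f1("the cat", "the cat sat")  # precision 1.0, recall 2/3
```

Tracking a score like this across model versions on a fixed evaluation set shows whether each round of refinement actually helps.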

While evaluation provides performance snapshots, relentless refinement ensures continued relevance, robustness, and efficacy despite evolving environments, data, and requirements.

When ready, models require thoughtful deployment guided by the principles of transparency, fairness, and accountability. Continuous monitoring afterward remains essential: regular checks, feedback collection, and metrics analysis help ensure lasting efficiency, accuracy, and ethical soundness across scenarios.

Deployment moves models into complex real-world contexts. Monitoring keeps them functionally and ethically sound over time, so responsible AI depends on technology and ethics working together.
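Continuous monitoring can start very simply. The sketch below keeps a rolling window of quality scores, for instance user ratings or automatic metric values scaled to the range 0 to 1, and flags the model for review when the recent average falls below a threshold. The class name, window size, and threshold are assumptions made for this example.

```python
from collections import deque
from typing import Optional


class QualityMonitor:
    """Tracks recent quality scores and flags a drop below a threshold."""

    def __init__(self, window: int = 50, threshold: float = 0.7) -> None:
        self.scores = deque(maxlen=window)  # old scores fall out automatically
        self.threshold = threshold

    def record(self, score: float) -> None:
        self.scores.append(score)

    def rolling_mean(self) -> Optional[float]:
        if not self.scores:
            return None
        return sum(self.scores) / len(self.scores)

    def needs_review(self) -> bool:
        mean = self.rolling_mean()
        return mean is not None and mean < self.threshold


monitor = QualityMonitor(window=3, threshold=0.7)
for score in (0.9, 0.8, 0.4, 0.3, 0.2):  # made-up feedback scores
    monitor.record(score)
```

After these five scores only the last three remain in the window, so `monitor.needs_review()` reports that quality has dropped. A production system would typically also log inputs, track fairness-related metrics, and alert a human reviewer.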

Curious about the magic behind Generative AI?

This section explores the key components, algorithms, and frameworks underlying generative AI systems.

Application frameworks streamline the adoption of generative AI by incorporating new advancements as they appear. Popular options like LangChain, Fixie, Microsoft's Semantic Kernel, and Google Cloud's Vertex AI simplify creating and updating generative AI apps.

These frameworks are changing how we work. They make it easier for developers to add generative AI to apps. This translates new research into real-world solutions.

As generative AI advances quickly, good frameworks help integrate it with existing tech. They will be key for businesses using generative AI in different industries.

Foundation Models (FMs) are the intelligent core of the system and can produce output that resembles human reasoning. Developers can choose from various pre-trained FMs based on factors like output quality, supported modalities, context size, cost, and latency. Options include proprietary models from vendors like Anthropic and Cohere, popular open-source models, and custom models that developers train themselves.

Large language models only know what was in their training data. Developers can supply additional data to make their answers more relevant, including structured data such as databases and unstructured data such as documents. Vector databases store and retrieve the vector representations (embeddings) used by these models. With retrieval-augmented generation, relevant data is retrieved and included in the prompt. This makes the outputs more personalised without extra training, so the models can give customised results at large scale and perform better on specific tasks.
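The retrieval step behind retrieval-augmented generation can be illustrated with a toy example. The sketch below assumes a bag-of-words vector stands in for a learned embedding and an in-memory list stands in for a vector database; all function names and the sample documents are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: Sequence[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, documents: Sequence[str], k: int = 2) -> str:
    """Place the retrieved documents in the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {query}"


docs = ["refunds are processed within five days",
        "our office is open on weekdays",
        "shipping takes two weeks"]
prompt = build_prompt("how long do refunds take", docs, k=1)
```

The resulting prompt would then be sent to the language model, which can answer from the retrieved text even though that text was never part of its training data.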

Developers need to balance tradeoffs between accuracy, cost, and speed. They can improve performance by iteratively refining prompts, fine-tuning models, and testing different vendor options. Evaluation tools help with prompt engineering, tracking experiments, and monitoring live systems. Developers can get the best accuracy at an affordable cost and speed by carefully optimising.
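One way to make this tradeoff explicit is to measure each candidate on your own evaluation set and then pick the most accurate option that fits the cost and latency budget. The sketch below shows that selection rule; the candidate names and all numbers are invented for illustration and do not describe any real model or vendor.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float     # measured on your own evaluation set, 0 to 1
    cost_per_1k: float  # cost per 1,000 requests, in your currency
    latency_ms: float   # typical response time


def pick_candidate(candidates: Sequence[Candidate], max_cost: float,
                   max_latency_ms: float) -> Optional[Candidate]:
    """Most accurate candidate within budget, or None if nothing qualifies."""
    eligible = [c for c in candidates
                if c.cost_per_1k <= max_cost and c.latency_ms <= max_latency_ms]
    return max(eligible, key=lambda c: c.accuracy, default=None)


# Hypothetical measurements for three setups.
options = [
    Candidate("large-hosted-model", accuracy=0.92, cost_per_1k=12.0, latency_ms=900),
    Candidate("small-fine-tuned-model", accuracy=0.88, cost_per_1k=2.0, latency_ms=250),
    Candidate("small-base-model", accuracy=0.79, cost_per_1k=1.5, latency_ms=220),
]
choice = pick_candidate(options, max_cost=5.0, max_latency_ms=500)
```

With this budget the most accurate option is excluded on cost and latency, so the fine-tuned smaller model is selected.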

Once generative AI applications are ready for production, AI developers have deployment options. They can choose self-hosting and frameworks like Gradio to get more customization and control. Turnkey services like Fixie allow teams to seamlessly build, share, and deploy AI agents without infrastructure burdens. With the complete generative AI stack transforming how we create and process information, flexible deployment options help developers. They let developers focus on innovation while still ensuring reliability, scalability, and proper governance. The right deployment strategies make it easier to get generative AI applications live so they can start delivering value.

Generative AI can have a major impact in many creative and technical fields. This modern technology can assist with tasks like programming, writing, visual arts, and other design work.

Generative AI offers exciting opportunities. Many forward-thinking companies are using its power to build, maintain, and monitor complex systems with ease. This technology helps organisations boost automation and remain adaptable in a fast-moving world.

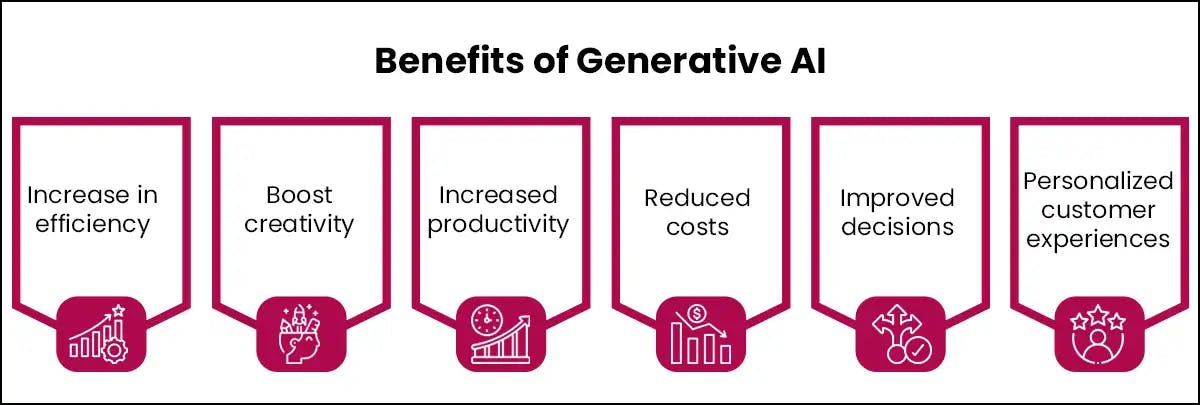

As generative AI advances, its value is becoming clear in a wider range of areas. Domain-specific generative AI models and solutions deliver significant advantages, and we're only seeing the start of the opportunities. Generative AI gives enterprises an edge in innovation, efficiency, speed, and precision.

Generative AI brings many advantages for business. Large companies that adopt AI position themselves ahead of their competitors. The scope for creating new things and growing with AI is wide.

Want to implement Generative AI and large language model software services in your business? Our Generative AI specialists at Codiste are ready to help with your project needs. Codiste's AI developers have expertise in developing models that improve decision-making, optimise cost, and so forth.

Connect with Codiste for expert advice on what's coming and guidance along the way.
