
How do you measure the effectiveness of your gen AI? There are AI metrics and specific KPIs for AI agents that help you track accuracy, operational success, efficiency, user engagement, and financial impact. Together they support an AI agent ROI analysis that measures cost savings and revenue growth, so you can confirm that your investments deliver tangible results.

Key performance indicators (KPIs) are essential for tracking progress and aligning AI initiatives with broader business objectives. As AI applications evolve, the metrics and KPIs used to measure them must evolve too. When adopting gen AI, KPIs are critical for assessing model performance, accounting for market trends, guiding data-driven adjustments to your AI implementation, and demonstrating value. However, many organizations rely solely on the computation-based KPIs used for traditional AI and overlook crucial metrics like system performance, adoption, and business value, often mistaking operational efficiency gains for the actual end goal.

In this post, we'll dive deeper into the KPIs essential for measuring the effectiveness of gen AI, including all the specific metrics and how to use them to make the most of your investments.

Model Quality KPIs For Evaluating Model Performance

Model quality metrics are key to assessing the accuracy and effectiveness of AI outputs. Evaluating a model's performance involves visualizing its effectiveness through various tools, such as confusion matrices and qualitative evaluations. Computation-based metrics work well for bounded outputs, for example when assessing a product search model against a labeled set of relevant results. Precision measures relevance (the share of returned results that are relevant), recall captures completeness (the share of relevant results that are returned), and the F1 score balances the two as their harmonic mean.
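To make the three computation-based metrics concrete, here is a minimal sketch for a product search scenario. The function name, the SKU identifiers, and the idea of a "golden" set of relevant results are illustrative assumptions, not part of any specific evaluation tool.

```python
def precision_recall_f1(retrieved, relevant):
    """Compute precision, recall, and F1 for one search query.

    retrieved: item IDs the model returned (hypothetical data)
    relevant:  item IDs a human labeled as correct (hypothetical data)
    """
    retrieved, relevant = set(retrieved), set(relevant)
    true_positives = len(retrieved & relevant)

    # Precision: how many returned items were relevant.
    precision = true_positives / len(retrieved) if retrieved else 0.0
    # Recall: how many relevant items were returned.
    recall = true_positives / len(relevant) if relevant else 0.0
    # F1: harmonic mean of precision and recall.
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return precision, recall, f1


# Illustrative query: the model returned four products, two of which are relevant.
search_results = ["sku-1", "sku-2", "sku-3", "sku-4"]
golden_set = ["sku-1", "sku-3", "sku-5"]
precision, recall, f1 = precision_recall_f1(search_results, golden_set)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

In practice you would average these values over many queries rather than judging a model on a single one.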

Generative AI can produce diverse outputs, from original to potentially harmful content, which calls for a more subjective evaluation approach. Model-based metrics use auto-raters, which are large language models acting as judges, to evaluate performance against criteria such as creativity, accuracy, and relevance. These judge models can automatically analyze responses using an evaluation template to measure output quality effectively.

There are two standard methods to produce quantitative metrics about a model's performance:

- Pointwise metrics score the candidate model's output against the evaluation criteria. These metrics work well in cases where defining a scoring rubric is straightforward. For example, the score could range from 0 to 5, where zero means the response does not fit the criteria and five means the response fits the criteria well.

- Pairwise metrics involve comparing the responses of two models and picking the better one to create a win rate. This is often used when comparing a candidate model with the baseline model. These metrics work well in cases where it’s difficult to define a scoring rubric and preference is sufficient for evaluation.

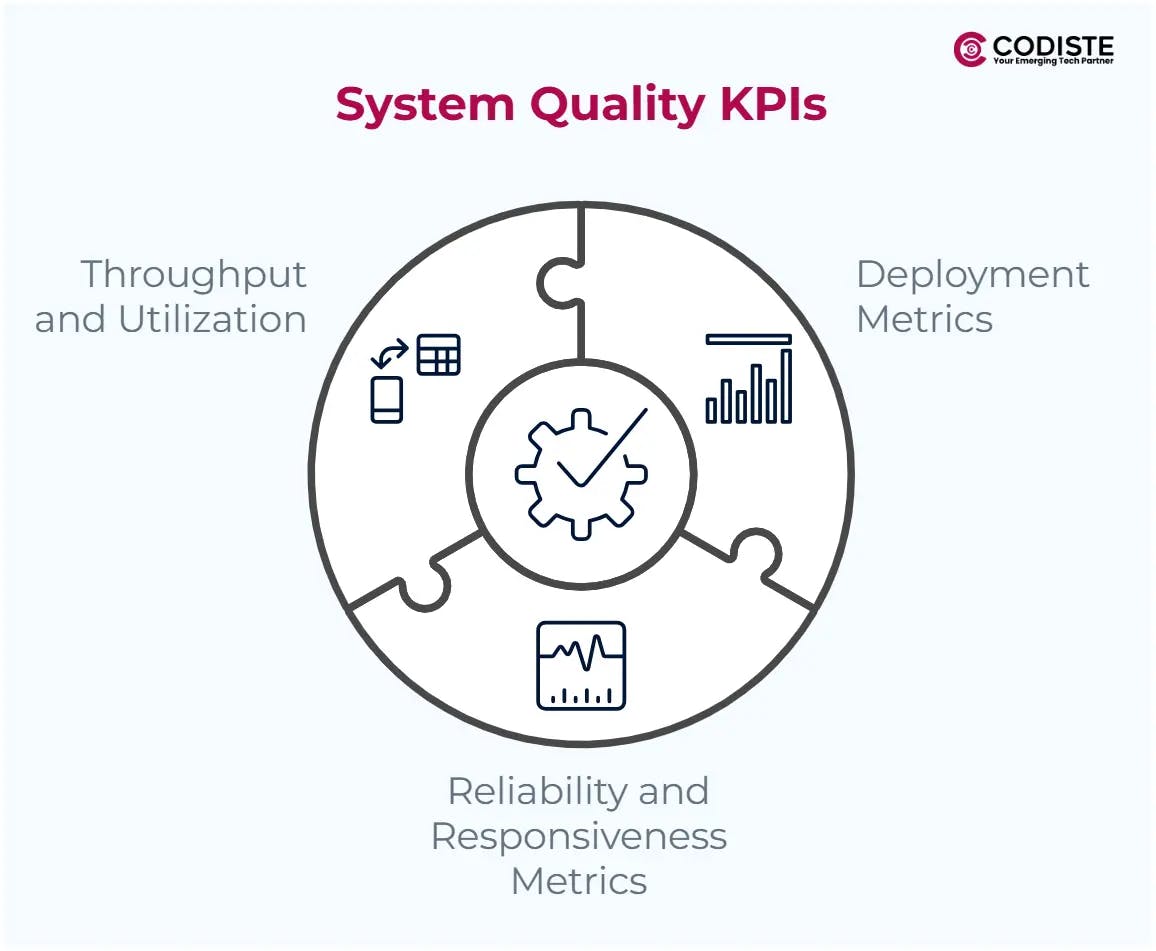
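The two methods above reduce to simple aggregations once a judge model has produced its verdicts. The sketch below assumes the verdicts already exist as plain Python lists; the function names, the 0-5 scale, and the "candidate"/"baseline"/"tie" labels are hypothetical, and the call to the judge model itself is deliberately left out.

```python
def pointwise_score(scores, max_score=5):
    """Average rubric score across responses, normalized to the 0..1 range."""
    if not scores:
        return 0.0
    return sum(scores) / (len(scores) * max_score)


def win_rate(preferences, candidate="candidate"):
    """Share of decided comparisons won by the candidate model.

    Ties are excluded from the denominator (an assumption; some teams
    count a tie as half a win instead).
    """
    decided = [p for p in preferences if p != "tie"]
    if not decided:
        return 0.0
    return sum(1 for p in decided if p == candidate) / len(decided)


# Hypothetical judge outputs for four prompts.
rubric_scores = [5, 4, 3, 0]
judge_preferences = ["candidate", "baseline", "candidate", "tie"]

print(pointwise_score(rubric_scores))
print(win_rate(judge_preferences))
```

How ties are handled changes the win rate noticeably on small evaluation sets, so it is worth fixing that convention before comparing runs.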

System Quality KPIs

As we've written before, you'll need to invest in an end-to-end AI platform to harness the full potential of gen AI across your organization. These platforms must seamlessly integrate all the key components required to develop, fine-tune, deploy, and manage models at scale, while measuring the performance of various aspects of an AI system, including deployments, responsiveness, and resource utilization.

System metrics focus on the operational aspects of your AI system, ensuring it runs efficiently, reliably, and at scale to support the needs across your organization. They offer insights into the health, performance, and impact of your AI platform and infrastructure.

Deployment Metrics

Tracking how many pipelines and model artifacts are deployed provides insights into your AI platform's capacity, governance, and organization-wide impact. Selecting appropriate metrics for the success of your AI project is crucial, as it helps align project objectives with business goals. Here are some of the most commonly used metrics:

- Number of deployed ML models

This metric measures the number of models currently serving predictions to users or applications. It may indicate whether you are a builder or a buyer when it comes to AI.

- Model time to deployment

This metric measures the average time it takes to deploy a new model or update an existing one, which reflects the velocity of your deployment processes. It can help highlight bottlenecks in your deployment pipeline.

- Percentage of automated pipelines

This measures the percentage of workflows that are automated across the entire lifecycle of your AI models. This metric helps you understand how much manual effort is required and where to invest in automation.

- Percentage of models with monitoring

This measures the percentage of deployed models that are actively monitored for changes in data distribution or degradation in model performance. This metric is essential for maintaining model performance over time.
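As a rough illustration, the deployment metrics above can be derived from a simple inventory of deployed models. The `Deployment` record and its fields are hypothetical, and the sketch assumes one pipeline per model, which will not hold on every platform.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Deployment:
    """One deployed model (hypothetical inventory record)."""
    name: str
    approved_at: datetime      # when the model was approved for release
    live_at: datetime          # when it started serving traffic
    automated_pipeline: bool   # assumption: one pipeline per model
    monitored: bool            # drift or performance monitoring enabled


def deployment_kpis(deployments):
    """Summarize deployment metrics for a list of Deployment records."""
    count = len(deployments)
    if count == 0:
        return {"deployed_models": 0, "avg_hours_to_deploy": 0.0,
                "pct_automated": 0.0, "pct_monitored": 0.0}
    hours = [(d.live_at - d.approved_at).total_seconds() / 3600
             for d in deployments]
    return {
        "deployed_models": count,
        "avg_hours_to_deploy": sum(hours) / count,
        "pct_automated": 100 * sum(d.automated_pipeline for d in deployments) / count,
        "pct_monitored": 100 * sum(d.monitored for d in deployments) / count,
    }


# Illustrative inventory with two models.
inventory = [
    Deployment("search-ranker", datetime(2025, 1, 1), datetime(2025, 1, 2), True, True),
    Deployment("support-bot", datetime(2025, 1, 1), datetime(2025, 1, 4), False, True),
]
kpis = deployment_kpis(inventory)
print(kpis)
```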

Reliability and Responsiveness Metrics

Tracking how quickly your AI platform responds to requests is critical for user experience and maintaining model accuracy and application performance. Here are some of the most commonly used metrics:

- Uptime

The percentage of time your system is available and operational. A higher uptime indicates greater reliability and availability.

- Error Rate

The percentage of requests that result in errors. Understanding error types can provide valuable insights into underlying system issues, such as quotas, capacity, data validation, user input error, and more.

- Model Latency

The time your gen AI model takes to process a request and generate a response. This metric can identify subpar user experiences and signal the need for a hardware upgrade.

- Retrieval Latency

The time it takes for your system to process a request, retrieve additional data from other applications, and return that data for use in a response. Optimizing retrieval latency is crucial for applications that rely on real-time data.
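The reliability and responsiveness metrics above can be sketched from basic request logs. The function names and sample values are illustrative assumptions. Latency is summarized with a nearest-rank percentile because averages tend to hide the slow tail that users actually notice.

```python
import math


def uptime_pct(total_minutes, downtime_minutes):
    """Percentage of the period during which the system was available."""
    return 100 * (total_minutes - downtime_minutes) / total_minutes


def error_rate_pct(status_codes):
    """Percentage of requests that returned an HTTP 4xx or 5xx status."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if code >= 400)
    return 100 * errors / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical model latencies in milliseconds for ten requests.
latencies_ms = [120, 80, 300, 95, 110, 2000, 105, 90, 100, 115]
print("p50:", percentile(latencies_ms, 50))
print("p95:", percentile(latencies_ms, 95))
print("error rate:", error_rate_pct([200, 200, 429, 500]))
```

The same `percentile` helper applies to retrieval latency if you log the retrieval step separately from model inference.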

Throughput and Utilization

Tracking throughput and resource utilization can reveal the processing capacity of your system. These metrics can help you optimize performance, manage costs, and allocate resources more effectively. Here are some of the most commonly used metrics:

- Request throughput

The volume of requests your system can handle per unit of time. It can identify the need for burst capacity to accommodate high request volumes and minimize HTTP 429 errors (Too Many Requests).

- Token throughput

The volume of tokens an AI platform processes per unit of time. As foundation models introduce new modalities and larger context windows, this metric is critical for ensuring appropriate sizing and usage.

- Serving nodes

The number of infrastructure nodes or instances handling incoming requests. This metric helps monitor capacity and ensure adequate resources are available to meet both steady-state and peak demand.

- GPU/TPU accelerator utilization

Measures the percentage of time specialized hardware accelerators like GPUs and TPUs are actively engaged in processing data. As AI infrastructure usage grows, this metric is crucial for identifying bottlenecks, optimizing resource allocation, and controlling costs.
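A minimal sketch of the throughput and utilization calculations above, assuming you can export request counts, token counts, status codes, and per-device busy time for a fixed measurement window. All names and figures are hypothetical.

```python
def throughput_per_second(count, window_seconds):
    """Requests (or tokens) processed per second over a measurement window."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return count / window_seconds


def rate_limited_share(status_codes):
    """Fraction of requests rejected with HTTP 429 (Too Many Requests)."""
    if not status_codes:
        return 0.0
    return sum(1 for code in status_codes if code == 429) / len(status_codes)


def accelerator_utilization_pct(busy_seconds_per_device, window_seconds):
    """Average percentage of the window that accelerators spent busy."""
    capacity = window_seconds * len(busy_seconds_per_device)
    return 100 * sum(busy_seconds_per_device) / capacity


# Hypothetical one-minute window on a four-accelerator serving pool.
print(throughput_per_second(1800, 60))       # requests per second
print(throughput_per_second(90_000, 60))     # tokens per second
print(rate_limited_share([200, 429, 200, 200]))
print(accelerator_utilization_pct([45, 30, 60, 15], 60))
```

A rising share of 429 responses alongside high accelerator utilization is one signal that burst capacity or additional serving nodes may be needed.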

When to use them

The metrics you choose will depend on your AI platform and the type of gen AI model you choose. For example, using proprietary models like Gemini means critical metrics, such as required serving nodes, target latency, and accelerator utilization, are handled by a Google-managed service so you can focus on building your application via simple APIs. If you use open models and host them yourself, you may need to incorporate a broader range of system quality metrics to help identify bottlenecks and optimize your AI system's performance.


Business Operational KPIs For AI Agents

Operational metrics track how AI systems impact business processes and outcomes, and they vary by solution and industry. Developing a framework for measuring AI success is crucial; it involves addressing complexities such as data management, establishing specific KPIs, and combining objective metrics with subjective evaluations. Changes in AI systems may affect KPIs differently; for instance, a retailer's chatbot might boost cart size but increase time-to-cart. Context and industry expertise are crucial for interpreting such shifts.

Here are some examples of business operational metrics used to track impact across the most common gen AI use cases in different industries.

Customer Service Modernization For Customer Satisfaction

Enterprises are leveraging generative AI and AI agent implementation to transform customer service, driving personalization and productivity improvements across telecommunications, travel, financial services, and healthcare sectors. Key metrics such as call and chat containment rates, average handle time, customer satisfaction, and churn provide insights into AI’s impact on efficiency, retention, and morale. By aligning these indicators, businesses can enhance customer experiences, optimize agent performance, and achieve a seamless, cost-effective service transformation.
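Two of the customer service metrics above, containment rate and average handle time, can be sketched as follows. The conversation records and field names are hypothetical, and containment is defined here as a conversation the AI agent resolved without handing off to a human, which is one common definition rather than a universal one.

```python
def containment_rate(conversations):
    """Share of conversations resolved by the AI agent without human handoff."""
    if not conversations:
        return 0.0
    contained = sum(1 for c in conversations if not c["escalated"])
    return contained / len(conversations)


def average_handle_time(conversations):
    """Mean handle time in seconds across the given conversations."""
    if not conversations:
        return 0.0
    return sum(c["handle_seconds"] for c in conversations) / len(conversations)


# Hypothetical chat log: one of four conversations was escalated to a human.
chats = [
    {"escalated": False, "handle_seconds": 60},
    {"escalated": False, "handle_seconds": 90},
    {"escalated": False, "handle_seconds": 120},
    {"escalated": True, "handle_seconds": 330},
]
print(containment_rate(chats))
print(average_handle_time(chats))
```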

Product, Service, and Content Discovery

Generative AI transforms customer discovery through personalized recommendations and intuitive search experiences, particularly in the retail, QSR, and travel industries. Key metrics to track for AI agent success include click-through rate (CTR), which evaluates the relevance of search results and recommendations, and time on site (TOS), which reflects user engagement and satisfaction: a longer overall TOS indicates deeper engagement, while a shorter TOS on product pages indicates efficient discovery. Revenue per visit (RPV) gauges monetization effectiveness and is influenced by metrics like CTR, conversion rate, and cart size. Additionally, visit volume offers insight into customer satisfaction, business growth, and the success of marketing campaigns.

By analyzing these metrics, businesses can fine-tune their AI strategies to enhance user engagement, drive efficient discovery, and boost overall revenue, ensuring a seamless and impactful customer experience.
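The discovery metrics discussed above are simple ratios. This sketch shows one way to compute them from aggregate counts; the function names and sample figures are illustrative assumptions.

```python
def click_through_rate(clicks, impressions):
    """Clicks divided by the number of results or recommendations shown."""
    return clicks / impressions if impressions else 0.0


def conversion_rate(orders, visits):
    """Share of visits that ended in an order."""
    return orders / visits if visits else 0.0


def revenue_per_visit(revenue, visits):
    """Total revenue divided by total visits."""
    return revenue / visits if visits else 0.0


# Hypothetical weekly totals for a retail site.
print(click_through_rate(clicks=120, impressions=4000))
print(conversion_rate(orders=150, visits=3000))
print(revenue_per_visit(revenue=9000.0, visits=3000))
```

Comparing these ratios before and after a gen AI feature launch, ideally with a holdout group, helps separate the feature's effect from seasonal or marketing-driven changes.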

Adoption KPIs For AI Agents

The broad potential of gen AI has introduced the need for a new set of metrics that track its adoption and use across organizations. Unlike predictive AI technologies, which are often integrated directly into an application, the success of gen AI hinges heavily on changes in human behavior and acceptance. For example, AI agents in business are only effective if your customers engage with them. Similarly, AI-enabled employee productivity tools can only drive productivity gains if employees adopt them into their daily workflows.

Here are some of the most commonly used metrics:

- Adoption rate

The percentage of active users for a new AI application or tool. Movements in this metric can help identify whether low adoption is caused by a lack of awareness (consistently low) or low performance (high adoption dropping to low).

- Frequency of use

Measures how many queries a user sends to a model on a daily, weekly, or monthly basis. This metric gives insight into the usefulness of an application and the types of usage.

- Queries per session

Measures the average number of queries a user sends in a single session, a proxy for how long they interact with an AI model. It may shed light on the model's entertainment value or its effectiveness at retrieving answers.

- Query length

The average number of words or characters per query. This metric illustrates how much context users submit to generate an answer.

- Thumbs up or thumbs down feedback

Measures satisfaction and dissatisfaction with a customer's interaction. This metric can be leveraged as human feedback to refine future model responses and improve output quality.

Adoption metrics reveal the usage and impact of your generative AI application, showcasing its value and areas for improvement. Combine this quantitative data with qualitative feedback, such as surveys or focus groups, for a holistic view of the accessibility, reliability, and usability of your AI capabilities, ensuring greater effectiveness in daily operations.
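As an illustration, the adoption metrics in this section can be computed from a query log. The log schema (`user_id`, `session_id`, `text`, `feedback`) and the count of eligible users are assumptions made for this sketch; your application's telemetry will likely differ.

```python
from collections import defaultdict


def adoption_kpis(queries, eligible_users):
    """Summarize adoption metrics from a query log for one reporting period."""
    if not queries or eligible_users <= 0:
        return None
    active_users = {q["user_id"] for q in queries}

    # Count queries per (user, session) pair.
    sessions = defaultdict(int)
    for q in queries:
        sessions[(q["user_id"], q["session_id"])] += 1

    # Only explicit thumbs up/down count as feedback.
    rated = [q["feedback"] for q in queries if q["feedback"] in ("up", "down")]
    return {
        "adoption_rate": len(active_users) / eligible_users,
        "queries_per_user": len(queries) / len(active_users),
        "queries_per_session": len(queries) / len(sessions),
        "avg_query_words": sum(len(q["text"].split()) for q in queries) / len(queries),
        "thumbs_up_rate": rated.count("up") / len(rated) if rated else None,
    }


# Hypothetical log: two of ten eligible users sent four queries in three sessions.
log = [
    {"user_id": "u1", "session_id": "s1", "text": "reset my password", "feedback": "up"},
    {"user_id": "u1", "session_id": "s1", "text": "where is my order", "feedback": None},
    {"user_id": "u2", "session_id": "s2", "text": "summarize this contract for me", "feedback": "down"},
    {"user_id": "u2", "session_id": "s3", "text": "draft a reply", "feedback": "up"},
]
summary = adoption_kpis(log, eligible_users=10)
print(summary)
```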

Business Value KPIs

Proving the value of generative AI investments is a significant challenge for executives and business leaders, and there is an increasing need to quantify the ROI of AI agents. Business value metrics build on operational and adoption metrics from existing systems, translating them into financial metrics that reflect the overall effect of AI initiatives on the organization.

The most common examples might include:

- Productivity value metrics

Captures productivity enabled by AI by measuring concrete improvements, such as average call handling times, document processing times, and time saved with tools.

- Cost savings metrics

Illustrates IT and services efficiencies from AI applications by measuring legacy licensing costs against AI solutions, call and chat containment rates, and cost savings for hiring and onboarding.

- Innovation and growth metrics

Assesses the role of AI in driving new products, services, or business models by measuring document processing capacity, knowledge extensibility, and improvements in work, communication, or asset quality.

- Customer experience metrics

Captures the impact of AI on customer satisfaction and loyalty by measuring churn reduction, revenue uplift, visit volume, time on site, and more.

- Resilience and security metrics

Evaluates how well gen AI can withstand disruption and protect sensitive data by measuring application downtime or scalability, reduced security risks, and improvements in detection and response.

How do you apply them?

After you establish your business operational and adoption KPIs, your finance team can translate them into financial impact metrics. Evaluating the costs of building and maintaining generative AI is essential to understand your ROI fully. Consider cost drivers such as data size, usage volume, number of models, and necessary resources for development and maintenance. Since generative AI costs can vary significantly, choose models that align with your performance, latency, and budget requirements.
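A minimal sketch of how operational numbers might be translated into financial ones. The formulas are standard (ROI as net benefit over cost), but the function names, per-token prices, hourly rate, and every figure below are hypothetical placeholders rather than real pricing.

```python
def monthly_inference_cost(requests, avg_input_tokens, avg_output_tokens,
                           price_in_per_1k, price_out_per_1k):
    """Usage-driven model cost, assuming simple per-1,000-token pricing."""
    input_cost = requests * avg_input_tokens / 1000 * price_in_per_1k
    output_cost = requests * avg_output_tokens / 1000 * price_out_per_1k
    return input_cost + output_cost


def productivity_value(hours_saved, loaded_hourly_cost):
    """Monetary value of time saved by employees using the tool."""
    return hours_saved * loaded_hourly_cost


def roi_pct(total_benefit, total_cost):
    """Return on investment as a percentage of total cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return 100 * (total_benefit - total_cost) / total_cost


# Hypothetical month: 100,000 requests plus fixed platform and staffing costs.
usage_cost = monthly_inference_cost(100_000, 500, 200, 0.001, 0.002)
total_cost = usage_cost + 9_910.0                 # assumed fixed costs
benefit = productivity_value(hours_saved=300, loaded_hourly_cost=50.0)
print(round(usage_cost, 2), round(roi_pct(benefit, total_cost), 1))
```

Keeping usage-driven costs separate from fixed costs makes it easier to see how ROI shifts as request volume grows or as you switch to a model with different pricing.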

Why KPIs Are Essential in AI Agent Implementation

KPIs are the key to a successful foundation for AI agents, offering measurable insights into their performance, adoption, and overall value to the business. As illustrated in real-world use cases, KPIs provide a structured framework to evaluate success.

Metrics like call and chat containment rates, average handle time, customer satisfaction, and churn rates enable organizations to assess how well the AI agent resolves inquiries, improves productivity, and enhances customer experiences. Businesses can identify bottlenecks, refine workflows, and optimize resource allocation by monitoring human and AI performance.

Moreover, KPIs bridge the gap between technical performance and business impact by tracking system reliability, operational efficiency, and ROI.

A comprehensive understanding of specific KPIs allows businesses to make data-driven decisions, ensuring AI solutions align with organizational goals and deliver maximum value.

Ultimately, businesses need to track the right KPIs and empower their enterprises to unlock the full potential of generative AI. We at Codiste can help drive agentic AI success and customer satisfaction. Reach out to us, and let us foster growth.
