Machine learning is expected to remain the largest segment of the AI market in 2025 and to hold that lead until at least 2030. Developing machine learning (ML) models is central to applying AI across industries: models let companies extract information from big data, automate routine tasks, and support complex decisions. This guide walks through the process of building a machine learning model, from setting business objectives to deploying the model in production.

The first and most essential step in developing a machine learning model is identifying the purpose it will serve within the organization. Clearly defined goals, together with metrics that signal success, simplify the ML development process. They keep the project aligned with business requirements and improve the company's overall return on investment (ROI).

Key elements in this stage include:

Transfer learning, in which a previously trained model is adapted to a new task, is a useful technique in supervised learning scenarios. It reduces the need for very large labeled datasets and therefore shortens ML model development time.
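As a rough illustration of the idea, the sketch below keeps a "pretrained" feature extractor frozen and trains only a small new classification head on a handful of labeled examples. Everything here is an assumption made for illustration: `PRETRAINED_WEIGHTS`, `extract_features`, and `train_head` are hypothetical names, and the toy weights stand in for the layers of a real pretrained network.

```python
import math

# Hypothetical frozen weights: in practice these would come from a model
# already trained on a large dataset. They are never updated below.
PRETRAINED_WEIGHTS = [[0.9, -0.4], [0.2, 0.8]]

def extract_features(x):
    """Frozen layer: a linear map followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=200, lr=0.5):
    """Fit only a new logistic-regression head on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    features = [extract_features(x) for x in samples]
    for _ in range(epochs):
        for f, y in zip(features, labels):
            pred = sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias)
            error = pred - y  # gradient of the log loss w.r.t. the logit
            weights = [w - lr * error * fi for w, fi in zip(weights, f)]
            bias -= lr * error
    return weights, bias

def predict(x, weights, bias):
    f = extract_features(x)
    score = sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias)
    return 1 if score >= 0.5 else 0

# A deliberately tiny labeled dataset for the new task (toy values).
X = [[1.0, 0.5], [0.8, 0.9], [-1.0, -0.3], [-0.7, -0.9]]
y = [1, 1, 0, 0]
head_w, head_b = train_head(X, y)
print([predict(x, head_w, head_b) for x in X])
```

Because only the head's three parameters are trained, a few labeled examples are enough here; with a real pretrained network the same pattern applies at a much larger scale.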

The next step is to explore the dataset with that objective in mind. Data exploration lets data scientists see the structure, trends, and relationships in a dataset, and this analysis guides the choice of a suitable machine learning algorithm.
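A first exploration pass often amounts to summary statistics per column plus a look at how columns relate to each other. The sketch below does this with the Python standard library on a made-up dataset; the column names `ad_spend` and `sales`, the values, and the helper names are assumptions for illustration only.

```python
import statistics

# Toy dataset (hypothetical values).
ad_spend = [10.0, 20.0, 30.0, 40.0, 50.0]
sales = [25.0, 41.0, 62.0, 79.0, 103.0]

def summarize(values):
    """Basic descriptive statistics for one numeric column."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
    }

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long columns."""
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

print(summarize(ad_spend))
print(summarize(sales))
print("correlation:", round(pearson(ad_spend, sales), 3))
```

A correlation close to 1, as in this toy data, would suggest a roughly linear relationship, which is the kind of observation that can steer the algorithm choice.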

The choice of algorithm usually falls into one of these three categories:

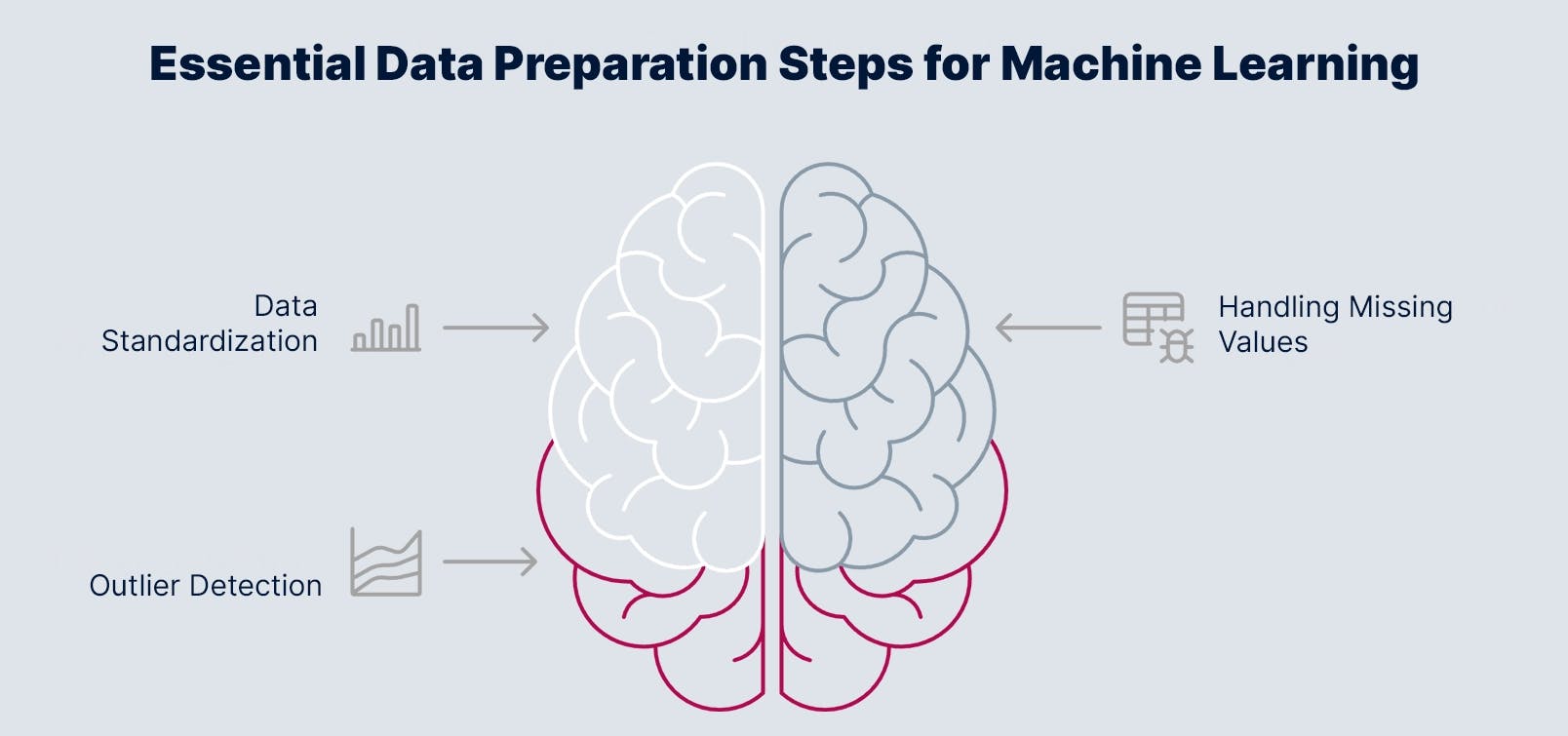

Data preparation is a critical yet demanding part of the ML model development process. It ensures the correctness of the dataset: a clean, error-free dataset has no inconsistencies that could adversely affect the model's performance.

Steps involved in data preparation include:

For supervised learning, data preparation further involves labeling the data. Labeling can be time-consuming, but it is necessary for the model to learn to detect patterns effectively.
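Two cleaning operations that commonly appear in data preparation are removing duplicate records and filling in missing values. The sketch below shows one simple way to do both; the records, field names, and the choice of median imputation are assumptions for illustration, not a prescribed procedure.

```python
import statistics

# Hypothetical raw records with one exact duplicate and two missing values.
raw_records = [
    {"id": 1, "age": 34, "income": 52000},
    {"id": 2, "age": None, "income": 61000},
    {"id": 2, "age": None, "income": 61000},  # exact duplicate
    {"id": 3, "age": 45, "income": None},
    {"id": 4, "age": 29, "income": 48000},
]

def drop_duplicates(records):
    """Keep the first occurrence of each identical record."""
    seen, unique = set(), []
    for record in records:
        key = tuple(sorted(record.items()))
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

def impute_median(records, field):
    """Replace missing values in `field` with the median of observed values."""
    observed = [r[field] for r in records if r[field] is not None]
    fill = statistics.median(observed)
    return [{**r, field: fill if r[field] is None else r[field]}
            for r in records]

clean = drop_duplicates(raw_records)
for field in ("age", "income"):
    clean = impute_median(clean, field)

for record in clean:
    print(record)
```

Median imputation is only one option; depending on the data, dropping incomplete rows or using a model-based fill may be more appropriate.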


The dataset is divided into separate training and testing sets to check how well the model generalizes. Typically, 80% of the data is used for training and the remaining 20% is held back for testing. This split lets the model be trained on one fixed set while its performance is assessed on data it has not seen.
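The 80/20 split described above can be sketched in a few lines. The function name `train_test_split` and the fixed seed are assumptions chosen for this example; the seed simply makes the shuffle reproducible.

```python
import random

def train_test_split(records, test_fraction=0.2, seed=42):
    """Shuffle a copy of the records and hold out a fraction for testing."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

data = list(range(100))  # stand-in for 100 labeled examples
train, test = train_test_split(data)
print(len(train), len(test))
```

Shuffling before splitting matters when the data is stored in some meaningful order, for example by date or by class; for time-series data a chronological split is usually the safer choice.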

Cross-validation is a further step to take when overfitting is a concern, that is, when a model shows good results during training but fails when tested on new data. Methods to achieve cross-validation are:

Cross-validation checks that the model's accuracy holds across many different subsets of the dataset, which gives confidence that the model will generalize to new data.
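One widely used form of cross-validation is k-fold: the data is cut into k parts, and each part takes a turn as the validation set while the rest is used for training. The sketch below is a minimal version under stated assumptions: the helper names, the trivial "predict the mean" model, and the toy data are all hypothetical and only serve to show the mechanics.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size

def cross_validate(xs, ys, k, fit, score):
    """Train on k-1 folds, score on the held-out fold, repeat for every fold."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model,
                            [xs[i] for i in val_idx],
                            [ys[i] for i in val_idx]))
    return scores

# Trivial stand-in model: always predict the mean of the training targets.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)

def mean_abs_error(model, xs, ys):
    return sum(abs(model - y) for y in ys) / len(ys)

xs = list(range(10))
ys = [2.0 * x for x in xs]
fold_scores = cross_validate(xs, ys, 5, fit_mean, mean_abs_error)
print(fold_scores)
```

A large spread between fold scores is a hint that performance depends heavily on which data the model saw. In practice the data would normally be shuffled before the folds are cut.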

After training and validating the model, optimization is essential to improve accuracy and efficiency. ML model optimization involves tuning hyperparameters: settings chosen by the data scientist that influence model behavior but are not learned by the model itself.

Examples of hyperparameters include:

ML model optimization can be automated with algorithms such as Bayesian optimization, which evaluates hyperparameter settings sequentially and uses each result to choose the next candidate. This systematic approach is typically faster and more efficient than manual trial and error.
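To show the shape of an automated search loop, the sketch below uses random search, a simpler technique than Bayesian optimization, chosen here because it fits in a few lines of standard-library code. The objective `validation_loss` is a hypothetical stand-in for "train a model with these settings and return its validation loss", and the hyperparameter names and ranges are assumptions.

```python
import random

def validation_loss(learning_rate, max_depth):
    """Hypothetical objective: lowest near learning_rate=0.1, max_depth=6."""
    return (learning_rate - 0.1) ** 2 + 0.01 * (max_depth - 6) ** 2

def random_search(objective, n_trials=200, seed=0):
    """Sample settings at random and keep the best one seen so far."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {
            # Log-uniform sample between 0.001 and 1.
            "learning_rate": 10 ** rng.uniform(-3, 0),
            "max_depth": rng.randint(2, 12),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss

best_params, best_loss = random_search(validation_loss)
print(best_params, best_loss)
```

A Bayesian optimizer keeps the same outer loop but replaces the random sampling step with a model of the objective that proposes the next promising setting, which usually means fewer expensive training runs.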

Deployment is the final development step, in which the model is moved from a local environment to a live production setting. In production, the model handles real-time data and either delivers useful insights or triggers the intended processes.

There are several approaches to deployment:

Tools such as Seldon Core and Seldon Enterprise Platform provide MLOps (machine learning operations) support, helping teams manage and monitor deployed models and keep them stable and performant in a live environment.
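Whatever platform is used, deployment usually involves two basic moves: exporting the trained model as an artifact, and loading that artifact in a service that turns incoming requests into predictions. The sketch below illustrates both with a toy linear model stored as JSON. The file name, the request format, and the function names are assumptions for illustration and do not reflect the API of Seldon or any other tool.

```python
import json
import os
import tempfile

def save_model(params, path):
    """Export trained parameters as a JSON artifact."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(params, fh)

def load_model(path):
    """Load the artifact inside the serving process."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)

def handle_request(model, request_body):
    """Take a JSON request string and return a JSON response string."""
    features = json.loads(request_body)["features"]
    score = model["bias"] + sum(
        w * x for w, x in zip(model["weights"], features)
    )
    return json.dumps({"prediction": score})

# "Training" side: hypothetical parameters of a fitted linear model.
trained = {"weights": [0.5, -0.25], "bias": 1.0}
model_path = os.path.join(tempfile.mkdtemp(), "model.json")
save_model(trained, model_path)

# "Production" side: load once, then answer requests.
served_model = load_model(model_path)
response = handle_request(served_model, '{"features": [2.0, 4.0]}')
print(response)
```

In a real service, `handle_request` would sit behind a web framework or a model server, with input validation, logging, and versioning of the artifact added around it.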

Deployment is not the end of the journey; continuous monitoring is essential to maintain model accuracy over time. Models can experience "drift," where their predictions degrade due to changes in underlying data patterns, commonly seen in fields like finance and marketing.

Post-deployment practices include:

Platforms such as Seldon provide tools for monitoring model drift, enabling organizations to take corrective action promptly.
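A simple way to see what a drift check does is to compare the distribution of a feature at training time with its distribution in production. The sketch below computes the Population Stability Index (PSI), one commonly used drift statistic. The data is synthetic, the function name is hypothetical, and the 0.25 threshold is a frequently quoted rule of thumb treated here as an assumption to tune; none of this describes how Seldon implements drift detection.

```python
import math

def population_stability_index(reference, live, n_bins=5):
    """PSI between a reference sample (training time) and a live sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins  # assumes reference values are not all equal

    def bin_fractions(values):
        counts = [0] * n_bins
        for value in values:
            index = int((value - lo) / width)
            # Clamp values that fall outside the reference range.
            counts[min(max(index, 0), n_bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(count / len(values), 1e-6) for count in counts]

    ref_frac = bin_fractions(reference)
    live_frac = bin_fractions(live)
    return sum((p_live - p_ref) * math.log(p_live / p_ref)
               for p_ref, p_live in zip(ref_frac, live_frac))

training_feature = [i / 100 for i in range(100)]       # spread over [0, 1)
stable_feature = list(training_feature)                # same distribution
shifted_feature = [0.5 + i / 200 for i in range(100)]  # squeezed into [0.5, 1)

psi_stable = population_stability_index(training_feature, stable_feature)
psi_shifted = population_stability_index(training_feature, shifted_feature)

DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb cut-off, tune per use case
needs_retraining = psi_shifted > DRIFT_THRESHOLD
print(psi_stable, psi_shifted, needs_retraining)
```

Running such a check on a schedule, per feature and on the model's output scores, gives an early signal that retraining or investigation may be needed.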

Machine learning model development is likely to require less time and effort in the future. Automated tools will let people develop models without deep technical knowledge, making machine learning accessible to more users and companies. Rather than manually training and testing supervised models from scratch, some companies already use pre-trained models and frameworks to jump-start their projects. As a result, more businesses will be able to improve their services and products with machine learning without spending a fortune or employing a technical team.

Another major shift is the growing focus on trustworthy and ethical models. As machine learning enters sectors such as healthcare and finance, it becomes important to understand how models reach their decisions and to reduce bias. Explainable AI tools can show why a model produces a given result, which builds trust. As privacy regulations increase, developers are also finding ways to protect data, such as training models on data from different sources without exposing sensitive information. These approaches will help machine learning be both powerful and accountable in the future.

Machine learning model development is a complex process that includes data collection, algorithm selection, training, validation, and deployment. Every step matters for ensuring that the model predicts accurately and stays aligned with business goals. Although platforms such as TensorFlow can make the MLOps part easier, it is ultimately the fundamentals, data quality and continuous optimization, that make a machine learning project successful.

Codiste, a leading machine learning development company in the USA, helps you follow a structured approach and develop the best ML model using the right tools. Codiste helps clients create scalable, accurate ML models tailored to their unique needs. Its focus on transparency and model optimization ensures that each solution is not only powerful but also ethically aligned with industry standards. This makes Codiste a reliable ML model development partner in the journey toward advanced, responsible AI.
